You can't be agile without CI/CD and TDD (Revisited)

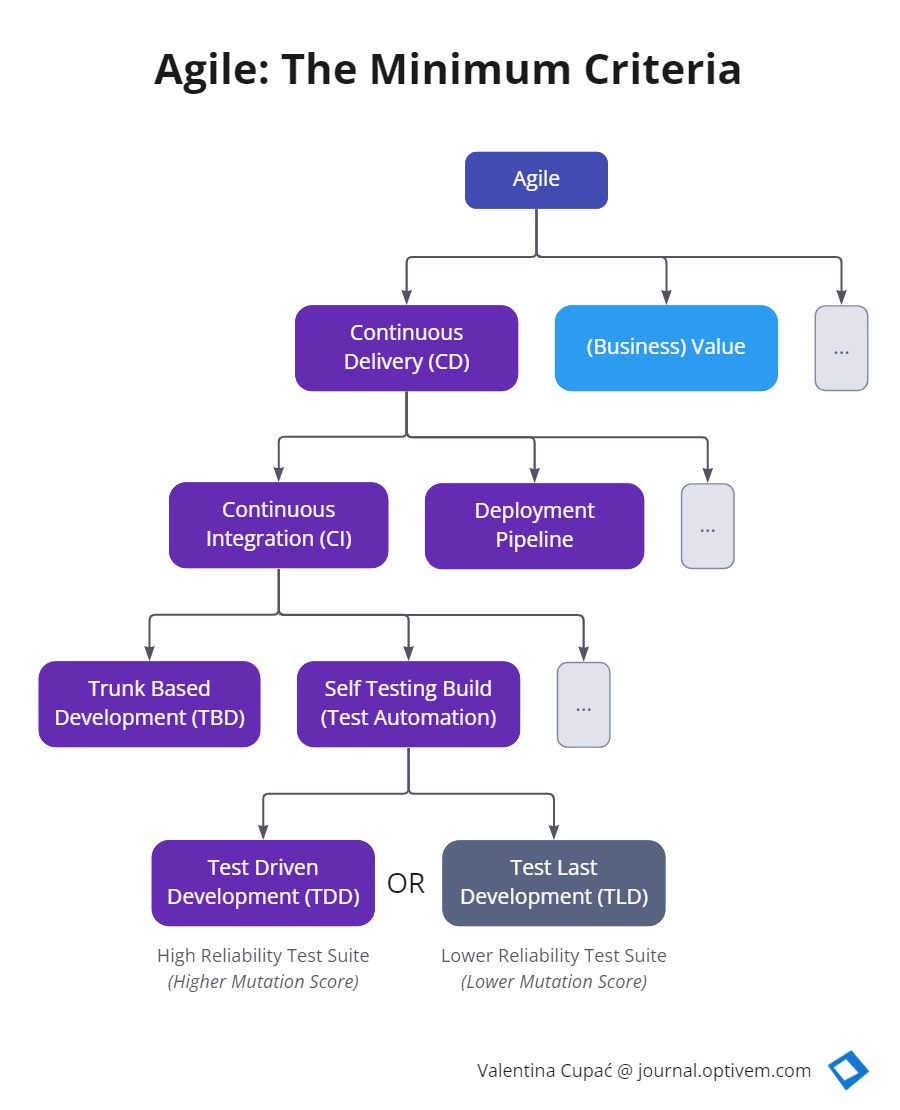

Can we be agile if we're not satisfying the first principle - Continuous Delivery? Continuous Delivery necessitates Continuous Integration. Continuous Integration necessitates Test Automation.

I had written a post on LinkedIn:

You can't be agile without CI/CD and TDD.

Unfortunately, agility is often "sold" as a PM silver bullet, e.g. that just adopting Scrum per se will accelerate software delivery. But, if there aren't technical standards in place regarding CI/CD & TDD, then, over time, quality will degrade and delivery will slow down - leading to product failure on the market.

Several months later, after 250+ comments and seeing this post circulating on LinkedIn during the past few days, I decided to write a follow-up commentary about that post. Why did I write it? What does it mean? I also wanted to expand it with some additional topics, including Trunk Based Development (TBD).

Agile has lost its technical roots

Agile has lost its original meaning over the years. Most notably, Agile lost its technical roots. Agile has come to mean everything, anything, or nothing.

On LinkedIn, I’ve seen many people say that Agile is “just” a mindset and that technical practices are unnecessary.

Part of this is caused by the prominence of Scrum. People (mistakenly) equate Agile with Scrum. Since Scrum does not prescribe technical practices, those practices took a back seat, were ignored, and came to be labeled “unnecessary”. This leads many to think that you can be agile without technical practices.

This problem is over a decade old. Martin Fowler describes the Flaccid Scrum problem, i.e. what happens when Scrum is adopted without adequate technical practices: progress slows down.

There's a mess I've heard about with quite a few projects recently. It works out like this:

They want to use an agile process, and pick Scrum

They adopt the Scrum practices, and maybe even the principles

After a while progress is slow because the code base is a mess

He then explains the root cause behind “After a while progress is slow because the code base is a mess”. Here we can see the need for technical practices to ensure sustainable progress (to avoid a slowdown in feature delivery):

What's happened is that they haven't paid enough attention to the internal quality of their software. If you make that mistake you'll soon find your productivity dragged down because it's much harder to add new features than you'd like.

The problems outlined by Fowler are present in our IT industry today, and the condition worsens daily.

Many companies are adopting “half“ of Agile; they’re adopting just the mindset, or just the organizational aspects of Agile, without adopting technical practices. This article shows that sustainable delivery can NOT be achieved without adequate technical practices. One crucial technical practice that we’ll be focusing on is Continuous Delivery.

This brings us to Extreme Programming, which contains the necessary practices for achieving agility. Read the book Extreme Programming Explained: Embrace Change (Kent Beck) and the Extreme Programming website (Don Wells). Also, read Test Driven Development: By Example (Kent Beck).

The background story

Before becoming a Technical Coach, I had worked as a Software Developer for several years, later as a Technical Lead and Software Architect. What I witnessed: Scrum without technical practices led to a slowdown in feature delivery and team burnout. Any “success” was short-lived - perhaps several sprints. Then disaster hit. Later I discovered that Fowler had already described it a decade ago, in Flaccid Scrum.

Many Agile Coaches were just Scrum Coaches. I didn’t meet any XP Coaches. Indeed, during my junior years, I only heard the words: Agile, Scrum, and JIRA Backlog. I didn’t hear about XP.

I was fortunate to meet two Agile Coaches with a technical foundation and a background in both Scrum and XP, Bojan Spasic and Zoran Vujkov, and to have conversations with them about the meaning of Agile: the technical roots of Agile; the necessity of technical practices to be Agile (particularly CI/CD and TDD); why feature branching (the famous GitFlow) is antithetical to CI; why we need TBD; why having Jenkins alone does not mean you’re doing CI; why test automation is a necessary condition for CI. Overall, the discussions went back to the principles in the Agile Manifesto and restated the problems which Fowler described in Flaccid Scrum.

It was “common sense” (for me) that Scrum alone (without technical practices) can’t accelerate delivery. Technical practices are necessary, not optional.

Fast-forward some months, and I wrote a post on LinkedIn:

You can't be agile without CI/CD and TDD.

Unfortunately, agility is often "sold" as a PM silver bullet, e.g. that just adopting Scrum per se will accelerate software delivery. But, if there aren't technical standards in place regarding CI/CD & TDD, then, over time, quality will degrade and delivery will slow down - leading to product failure on the market.

After publishing that post, I received comments that spanned a spectrum (paraphrased/aggregated):

Yes, you’re 100% right!

You’re right about CI/CD, but not about TDD

You’re wrong about both; CI/CD isn’t mentioned in the Agile Manifesto; automated tests aren’t mentioned either (let alone TDD)

Shortly afterward, I had exchanges with the following Agile Coaches & Trainers who understood and promoted the need for technical excellence: Michael Küsters, Marco Consolaro, Alessandro Di Gioia, Alexandre Cuva, Yoan Thirion, and Thabet Mabrouk.

I also met Product Owners who advocated for technical excellence - notably Victor Billette de Villemeur and Alex Covic. I recommend the article The importance of tech excellence — there’s more in agility than only ceremonials by Victor Billette de Villemeur because it describes the need for technical excellence in an easy-to-understand way (esp. for management audiences).

What is “agile“?

I’m specifically referring to agility within software development, as described in the “Manifesto for Agile Software Development” (aka Agile Manifesto). So for the purposes of this article, the words “agile“, “Agile“, “agility“, “Agility“ all refer to the definition in the Agile Manifesto, and not to any other definitions. I’m also not distinguishing between “agile“ as an adjective versus verb versus noun.

Let’s look at the first principle in the Agile Manifesto:

Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

Let’s break it down:

So what’s the whole purpose of agility? It’s to satisfy the customer.

And how do we satisfy the customer? Through continuous delivery.

Continuous delivery of what? Of valuable software.

How can we claim to be agile if we do not satisfy the first principle of agility?

What is “Continuous Delivery”?

The first principle of the Agile Manifesto mentions Continuous Delivery, but what does it mean?

Jez Humble defines Continuous Delivery (continuousdelivery.com):

Continuous Delivery is the ability to get changes of all types—including new features, configuration changes, bug fixes and experiments—into production, or into the hands of users, safely and quickly in a sustainable way.

Our goal is to make deployments—whether of a large-scale distributed system, a complex production environment, an embedded system, or an app—predictable, routine affairs that can be performed on demand.

We achieve all this by ensuring our code is always in a deployable state, even in the face of teams of thousands of developers making changes on a daily basis. We thus completely eliminate the integration, testing and hardening phases that traditionally followed “dev complete”, as well as code freezes.

Martin Fowler writes a summarized version:

Continuous Delivery is a software development discipline where you build software in such a way that the software can be released to production at any time.

What are the minimal activities required to achieve Continuous Delivery? (see MinimumCD):

Continuous Integration (CI)

Deployment Pipeline (aka Application Pipeline)

Note: I’ve listed just a subset of minimum activities; see MinimumCD for the full list of minimum activities.

There are many misconceptions about Continuous Delivery. In this article, I cover the essentials of Continuous Delivery, but I highly recommend reading Bryan Finster’s article Continuous Delivery FAQ, which emphasizes “continuous product development and continuous quality feedback” to “accelerate delivery to reduce the cost and improve the safety of change”:

Continuous delivery is continuous product development and continuous quality feedback. The robots enable us to standardize and accelerate delivery to reduce the cost and improve the safety of change. This makes it viable for us to deliver very small changes to get feedback on quality immediately. People frequently underestimate the definition of “small”. We aren’t talking epics, features, or even stories. We are talking hours or minutes worth of work, not days or weeks. A CD pipeline includes the robots, but it starts with the value proposition and ends when we get feedback from production on the actual value delivered so that we can make decisions about future value propositions. This takes teamwork from everyone aligned to the product flow and unrelenting discipline for maintaining and improving quality feedback loops.

For further learning, see materials by Jez Humble and Dave Farley:

Read the website continuousdelivery.com by Jez Humble

Watch YouTube videos Continuous Delivery by Dave Farley

Read the book Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation by Jez Humble and Dave Farley (see the introduction by Fowler)

A summarized version of the minimum conditions for CD is here:

Read the website MinimumCD, whose signatories include Dave Farley

Some people have objections regarding the currently accepted definitions of Continuous Delivery. Andrea Laforgia provides a good summary that clarifies possible misconceptions regarding Continuous Delivery (CD) within the Manifesto for Agile Software Development (MfASD):

Some people object to Farley-Humble's CD equating to Agile's CD. They say the MfASD merely meant "very frequent".

I disagree. I think the definition of a "release process that is repeatable, reliable, and predictable, which in turn generates large reductions in cycle time, and hence gets feature and bugfixes to users fast" (CD, p.17) is perfectly aligned with the MfASD and trumps the idea of mere "very frequent" releases. So yes, it is legitimate to assume that agile software development, today, should imply Continuous Delivery as defined by Farley-Humble.

I’d also like to add a historical note regarding the term “continuous”. Grady Booch coined the term Continuous Integration in 1991; it is referenced within Extreme Programming (page Integrate Often), and the Agile Manifesto was published in 2001. The authors of the manifesto chose a very strong wording, “continuous delivery” (rather than “frequent delivery”), within the first principle of the manifesto. The word “continuous” is stronger than “frequent”. Indeed, let’s also link it to the term “continuous function” in mathematics, represented as an unbroken curve. That’s what we’re striving to achieve here, too, in the smallest possible increments.

What is “Continuous Deployment“?

The scope of this article is just Continuous Delivery (which includes Continuous Integration). Continuous Deployment is NOT in the scope of this article (because Continuous Deployment is not necessitated by any of the principles in the Agile Manifesto).

So why am I mentioning it? Because many people mix the words “Continuous Delivery“ with “Continuous Deployment”!

Please don’t confuse Continuous Delivery with Continuous Deployment! Continuous Delivery means that we can release to production at any time, i.e., our system is in a releasable state, but not that we’re continuously releasing to production! On the other hand, Continuous Deployment means that we’re releasing to production continuously, i.e., every change is released to production. Continuous Deployment does require Continuous Delivery.

Read more in Fowler’s article, where he writes, “Continuous Delivery is sometimes confused with Continuous Deployment”, and then explains the difference.

What is “Continuous Integration”?

From the above, we see that Continuous Integration is one of the minimal criteria to satisfy Continuous Delivery.

MinimumCD provides a formal definition:

CI is the activity of very frequently integrating work to the trunk of version control and verifying that the work is, to the best of our knowledge, releasable.

Fowler explains Continuous Integration:

Continuous Integration is a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily - leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible.

Minimum activities for CI include (see MinimumCD):

Trunk Based Development (TBD)

Minimum Daily Integration to the Trunk

Test Automation (Self-Testing Build)

Note: I’ve listed just a subset of minimum activities; see MinimumCD for the full list of minimum activities.

What is “Trunk Based Development“?

Trunk Based Development (TBD) is defined on MinimumCD:

Trunk-based development is the branching pattern required to meet the definition of CI.

We can also see some more detail on trunkbaseddevelopment.com:

A source-control branching model, where developers collaborate on code in a single branch called ‘trunk’ *, resist any pressure to create other long-lived development branches by employing documented techniques.

As described on MinimumCD, there are two ways to achieve TBD - we could either directly integrate all changes into the trunk, or if we’re using branches, we’d have to use short-lived branches.

This helps ensure we meet the requirements for CI, which require minimum daily integration to the trunk.

Trunk Based Development meets this condition because all changes are directly integrated into the trunk, or short-lived branches are integrated daily

Feature Branching does NOT meet this condition because it involves longer-lived branches; there is no daily integration into the trunk
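The difference between the two branching styles can be sketched as a simple check on branch lifetime. This is a minimal illustration only: the function name `is_short_lived` and the one-day limit `MAX_BRANCH_AGE` are assumptions derived from the "minimum daily integration" criterion above, not definitions taken from MinimumCD.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumption: "short-lived" is read as "integrated into the trunk within one day",
# following the minimum daily integration criterion described above.
MAX_BRANCH_AGE = timedelta(days=1)


def is_short_lived(created_at: datetime,
                   merged_at: Optional[datetime],
                   now: datetime) -> bool:
    """Return True if the branch lifetime stays within MAX_BRANCH_AGE.

    For a branch that is still open (merged_at is None), the lifetime
    is measured from creation until `now`.
    """
    end = merged_at if merged_at is not None else now
    return end - created_at <= MAX_BRANCH_AGE
```

Under these assumptions, a branch created in the morning and merged the same afternoon passes the check, while a feature branch merged after a month (or still open after several days) does not.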

What is a “Self-Testing Build”?

A self-testing build is a build that includes the execution of automated tests as part of the build. This means it’s not just about whether our program compiles and runs but about verifying the program's behavior as it executes.

Now, how to achieve a self-testing build? A self-testing build requires us to write automated tests to verify that our code behaves per executable specifications.
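As a rough sketch of the idea, here is a build step in Python that succeeds only when the automated tests pass. It uses the standard `unittest` module; the names `price_with_tax`, `PriceWithTaxTest`, and `self_testing_build` are hypothetical and exist only for this illustration.

```python
import unittest


def price_with_tax(net: float, rate: float) -> float:
    """Hypothetical production code under test."""
    return round(net * (1 + rate), 2)


class PriceWithTaxTest(unittest.TestCase):
    """Executable specification for price_with_tax."""

    def test_adds_tax_to_net_price(self):
        self.assertEqual(price_with_tax(100.0, 0.2), 120.0)


def self_testing_build() -> bool:
    """Run the automated tests as part of the build.

    Returns True only if every test passes, so a behavioral
    regression fails the build, not just a compile error.
    """
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PriceWithTaxTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

In a real pipeline, the build server would run the project's test command and treat a non-successful result as a failed build; the function above just makes that rule explicit.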

Why Test Automation? Why should developers write tests - why not rely on manual QA Engineer testing? The importance of test automation is described by Eric Mignot in How to make Agile work?:

One key I believe is the sustainable pace practice, from the second circle. One team could rely on manual testing during one iteration or two, but what happens after 15 iterations, after 42 iterations? What if the manual regression testing eats all the time? You end up with a dead product that you can not extend anymore.

Why TDD?

We could produce self-testing code in the following ways:

Test Driven Development (TDD)

Test Last Development (TLD)

Test First Development

Test Driven Development (TDD)

The TDD practice naturally leads us to produce self-testing code. The reason is that we have to write a failing test before we write the code to make the test pass. This ensures that the code is self-testing.

Test Last Development (TLD)

In TLD, tests are written after code. But when exactly are they written? There is no guideline regarding when - or if ever - the tests will be written. We could write a test immediately after we’ve written some code, or we could write a lot of code and then write tests when we finish some feature. Or we could further delay the writing of the tests… indefinitely, which means we’d never write tests at all; in that case, the result would be no tests or a test suite with many holes.

Thus, with TLD, in the best case, tests will be written immediately after the code, and the code will be self-testing. In the worst case, the writing of tests will be indefinitely delayed, which means most of our code will not be self-testing. Even when we do write tests, we might not write any assertions at all - the tests will be green, code coverage can be 100%, yet we’re not testing anything! Such tests do not protect us against regression bugs.
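The "green test without assertions" case is easy to reproduce. The sketch below is illustrative only: `apply_discount` is a hypothetical function with a deliberate bug, and `passes` is a tiny stand-in for a test runner.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical production code with a deliberate bug:
    it adds the discount instead of subtracting it."""
    return price + price * percent / 100


def assertion_free_test() -> None:
    # Executes every line of apply_discount (100% line coverage),
    # but verifies nothing about the result.
    apply_discount(200.0, 10.0)


def asserting_test() -> None:
    # Same call, but the expected behavior is actually checked.
    assert apply_discount(200.0, 10.0) == 180.0


def passes(test) -> bool:
    """Run a test function and report whether it passed."""
    try:
        test()
        return True
    except AssertionError:
        return False
```

Here `passes(assertion_free_test)` is true even though the code is wrong, while `passes(asserting_test)` is false: only the second test would catch the bug.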

These “holes“ in the test suite can be detected by running Mutation Testing. Typically, teams using Test Last Development will get a low Mutation Score (even if they have 100% Code Coverage!).
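To make the Mutation Score concrete, here is a hand-rolled sketch. Real mutation testing tools generate mutants automatically; in this illustration the mutants are written by hand, and all names (`is_adult`, `MUTANTS`, `SUITE_WITH_HOLES`, `THOROUGH_SUITE`, `mutation_score`) are hypothetical.

```python
from typing import Callable, List

Impl = Callable[[int], bool]
Check = Callable[[Impl], None]


def is_adult(age: int) -> bool:
    """Original production code (hypothetical)."""
    return age >= 18


# Hand-written mutants: each one changes a single detail of is_adult.
MUTANTS: List[Impl] = [
    lambda age: age > 18,   # boundary shifted
    lambda age: age < 18,   # comparison inverted
    lambda age: True,       # condition replaced by a constant
]


def check_typical_adult(impl: Impl) -> None:
    assert impl(30)


def check_boundary(impl: Impl) -> None:
    assert impl(18)


def check_minor(impl: Impl) -> None:
    assert not impl(17)


# Both suites execute the single line of is_adult, so line coverage is 100% for each.
SUITE_WITH_HOLES: List[Check] = [check_typical_adult]
THOROUGH_SUITE: List[Check] = [check_typical_adult, check_boundary, check_minor]


def is_killed(suite: List[Check], mutant: Impl) -> bool:
    """A mutant is killed when at least one check fails against it."""
    for check in suite:
        try:
            check(mutant)
        except AssertionError:
            return True
    return False


def mutation_score(suite: List[Check], mutants: List[Impl]) -> float:
    """Fraction of mutants killed by the suite."""
    killed = sum(1 for mutant in mutants if is_killed(suite, mutant))
    return killed / len(mutants)
```

With these three mutants, `SUITE_WITH_HOLES` kills only the inverted comparison (a score of one third), while `THOROUGH_SUITE` kills all three. The coverage number is identical for both suites; only the Mutation Score reveals the holes.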

So we’d have to run Mutation Testing - but Mutation Testing is slow, so it is not practical to run it regularly! We must then “live with” holes in our test suite until the next cycle of Mutation Testing. This means more holes in the test suite at any given point in time. Furthermore, with TLD, we’d have to enforce the writing of tests (and verification via Code Coverage and Mutation Testing) during merges.

See also Uncle Bob’s post Mutation Testing where he describes the possibility of using Mutation Testing with test last development:

A fundamental goal of TDD is to create a test suite that you can trust, so that you can effectively refactor.

For years the argument has been that test-after simply cannot create such a high reliability test suite. Only diligent application of the TDD discipline has a chance of creating a test suite that you implicitly trust.

As hard-nosed as I am about TDD as a necessary discipline; if I saw a team using mutation testing to guarantee the semantic stability of a test-after suite; I would smile, and nod, and consider them to be highly professional. (I would also suggest that they work test-first in order to streamline their effort.)

Test First Development

Generally, most people talk about TDD vs TLD. The problem is that sometimes when people refer to TDD they might be referring to just plain “Test First“ Development. In Test First Development, we can write multiple tests at once, and then write all the source code to satisfy the tests. However, in TDD, we must write strictly one test at a time (and then write code to make the test pass); in TDD, we are not allowed to write more than one test at a time.

Thus, TDD is incremental, but Test First does NOT require incrementalism!

The problem with Test First, due to the fact that it is NOT incremental, is that we can easily write more code than was required to make the tests pass. The test suite and the codebase are NOT in equilibrium because the code exhibits more behavior than the behavior verified by the test suite.
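A small sketch of this disequilibrium, using hypothetical names throughout: the tests were written up front as a batch, and the implementation then grew an `express` option that no test asked for.

```python
def shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical implementation written after a batch of tests."""
    cost = 5.0 if weight_kg <= 1 else 5.0 + 2.0 * (weight_kg - 1)
    if express:
        # Extra behavior added "while we were at it"; no test requires it.
        cost *= 2
    return cost


def shipping_cost_without_express(weight_kg: float, express: bool = False) -> float:
    """The same code with the express behavior removed (a regression)."""
    return 5.0 if weight_kg <= 1 else 5.0 + 2.0 * (weight_kg - 1)


def batch_written_checks(impl) -> None:
    """The tests that were written first; none of them covers express shipping."""
    assert impl(1.0) == 5.0
    assert impl(3.0) == 9.0


def passes(check, impl) -> bool:
    """Run a check against an implementation and report whether it passed."""
    try:
        check(impl)
        return True
    except AssertionError:
        return False
```

Both implementations pass `batch_written_checks`, so removing or breaking the express behavior would go unnoticed. In strict TDD, the `express` branch would not exist until a failing test demanded it.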

This leads to a lower Mutation Score; our test suite does NOT protect us against regression bugs. This is the same as the problem of Test Last Development - the test suite is unreliable at protecting us against regression bugs. We see this through a low Mutation Score, as in Test Last Development.

So what’s the impact?

With TDD, the practice guarantees we’ll get tests asserting the expected behavior

With TLD, there is no such guarantee, there is no guarantee that tests will be written at all (so we’d need to enforce Code Coverage checks upon merge), and there is no guarantee that the tests will assert the expected behavior (this is evident through a low Mutation Score, so we’d have to run Mutation Testing to see missing assertions, but Mutation testing is slow-running and infrequently run, so we end up with longer-lived holes)

With Test First Development, we will face similar problems as TLD - a low Mutation Score, because the tests are not asserting the full extent of the behavior of the code

TDD is the most straightforward way to get a reliable test suite.

For further details regarding the comparison of Test First vs Test Last, incremental vs batch testing, please see my article A Model for Test Suite Quality.

In conclusion, there are three ways to achieve a Self Testing Build with a roughly similar level of reliability:

TDD - convenient

TLD with Code Coverage and Mutation Testing on merge requests - painful!

Test First with Code Coverage and Mutation Testing on merge requests - painful!

Disclaimer: Above, we considered TDD only in the context of achieving self-testing code because self-testing code is a criterion for Continuous Integration. From that perspective, we focused only on how TDD enables us to produce a reliable test suite (evidenced by a high mutation score), so in that context, we see TDD as one of the ways to produce a test suite, i.e., a testing approach. However, to avoid any misinterpretation, I want to emphasize the essence of TDD: it is about incrementally driving development through executable specifications. A reliable test suite (with high code coverage & mutation score) is simply a side effect, not the target, of TDD. We may explore this in a subsequent article.

What is a “Deployment Pipeline“?

As referenced from MinimumCD, a deployment pipeline was described in the Continuous Delivery book by Jez Humble and Dave Farley:

At an abstract level, a deployment pipeline is an automated manifestation of your process for getting software from version control into the hands of your users. Every change to your software goes through a complex process on its way to being released. That process involves building the software, followed by the progress of these builds through multiple stages of testing and deployment. This, in turn, requires collaboration between many individuals, and perhaps several teams. The deployment pipeline models this process, and its incarnation in a continuous integration and release management tool is what allows you to see and control the progress of each change as it moves from version control through various sets of tests and deployments to release to users.

You can see a summarized version of a Deployment Pipeline by Fowler too.

Now to achieve automation, we require some tools; an example of a tool is Jenkins which is an automation server that we can use to help us build a Deployment Pipeline (there are also alternatives, such as GitLab, GitHub Actions, AWS CodePipeline, Azure Pipelines).

As a corollary, having Jenkins per se (or any other build server) does NOT imply that we’re doing CI/CD. It is a common misconception: many development teams believe that just by setting up a Jenkins pipeline, they’re practicing CI/CD. Based on an exchange with Karel Smutný and Marc Loupias regarding teams’ misunderstandings of tools versus practices, I wrote a sample dialogue that illustrates a really common situation:

Question: “Are you practicing CI/CD?”

Answer: “Yes, we are. We have a Jenkins CI/CD pipeline“

Question: “Which branching strategy do you use? TBD?“

Answer: “No, we’re using Feature Branching - GitFlow.“

Question: “How often do you integrate? Daily?“

Answer: “No, it depends on the Feature, generally once a month.“

Question: “Do you write tests for your code?”

Answer: “No, we rarely write tests for our code. The QA Engineer does it.”

Yes, a deployment pipeline is required to achieve Continuous Integration, but it is not enough. If we have Jenkins but aren’t practicing TBD, aren’t integrating to the trunk daily, and aren’t writing automated tests, then we are NOT practicing CI.
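The point of the dialogue can be expressed as a simple predicate. This is only a sketch: `TeamPractices` and `is_practicing_ci` are hypothetical names, and the fields mirror the subset of criteria discussed in this article, not the full MinimumCD list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamPractices:
    has_build_server: bool            # e.g. a Jenkins pipeline exists
    trunk_based_development: bool     # TBD rather than long-lived feature branches
    integrates_to_trunk_daily: bool   # at least one integration per day
    build_runs_automated_tests: bool  # self-testing build


def is_practicing_ci(team: TeamPractices) -> bool:
    """Every criterion must hold; a build server alone is not enough."""
    return (
        team.has_build_server
        and team.trunk_based_development
        and team.integrates_to_trunk_daily
        and team.build_runs_automated_tests
    )


# The team from the dialogue: Jenkins, GitFlow, monthly integration, rare tests.
team_from_dialogue = TeamPractices(
    has_build_server=True,
    trunk_based_development=False,
    integrates_to_trunk_daily=False,
    build_runs_automated_tests=False,
)
```

For `team_from_dialogue`, `is_practicing_ci` returns `False`: the tool is present, but none of the practices are.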

Necessary versus Sufficient

Continuous Delivery is necessary to achieve agility:

Continuous Delivery (CD) is necessary (to satisfy the first principle of the Agile manifesto).

Continuous Integration (CI) is necessary (to satisfy minimal criteria for CD)

Deployment Pipeline is necessary (to satisfy minimal criteria for CD)

Trunk Based Development (TBD) is necessary (to satisfy minimal criteria for CI)

Daily Trunk Integration is necessary (to satisfy minimal criteria for CI)

Self Testing Build (Test Automation) is necessary (to satisfy minimal criteria for CI)

Now to achieve Self Testing Build, a reliable test suite, we have two paths:

We could use TDD, which naturally leads to a reliable test suite

Or we could use TLD with additional “checkpoints” during merge, covering Code Coverage and Mutation Score, after which the developers would have to retroactively add the missing tests, including adequate assertions

Note: I also had some exchanges with Andrea Laforgia, and I remember he posted about a similar theme - see recent commentary.

But is Continuous Delivery sufficient? Let’s say we have the best CI/CD pipeline in the world, 100% Code Coverage, and 100% Mutation Score. Is it enough? No, it’s not: we could be “continuously delivering” low-value software, not responding to customer feedback, etc.

You can’t be agile without Continuous Delivery.

But just because you achieve Continuous Delivery doesn’t mean you’re agile.

This is why Continuous Delivery alone is not sufficient. As Igal Ore commented, if we have just CD practices but Value Streams are ignored, then we could just be generating waste efficiently. Thanks also to him for recommending the book Driving DevOps with Value Stream Management. In a similar vein, Tom Hoyland commented: “I’ve seen teams with CI/CD, TDD and BDD, with K8s everywhere and some were the least agile teams I’ve encountered.”

To summarize the distinction between “necessary“ versus “sufficient“ in this context: Continuous Delivery is a necessary condition for agility but NOT sufficient.

Here we covered just a subset of the minimal criteria for satisfying the first principle of the Agile Manifesto. Is this all? No. Claiming to be agile means satisfying all the other principles and values outlined in the Agile Manifesto.

But what about Scrum?

Notice that I didn’t mention Scrum anywhere above.

You CAN be agile WITHOUT Scrum. You don’t need sprints. You don’t need Scrum roles or Scrum events.

Also, just to add: You don’t need JIRA. You don’t need Story Points.

The essence of agility is the continuous delivery of value.

Can you be agile without Continuous Delivery? NO.

Can you be agile without delivering Value? NO.

But if Scrum is not the essence, why are most Agile Transformation consultancies selling Scrum? Why isn’t anyone selling Continuous Delivery? Because Scrum is much easier to “sell”, it’s much easier for management to “buy“ Scrum. That’s why Agile Transformations start well, but in the absence of Continuous Delivery practices, the delivery cannot be sustained over the long term!

I repeat: The essence of agility is the continuous delivery of value.

On a historical note (thanks to Eric Mignot in How to make Agile work?), referring to the authors of the Agile manifesto - and what happened afterwards:

And after writing the manifesto, they continued to challenge each other. As an evidence of this, consider the letter that Martin Fowler wrote in 2009 about flaccid Scrum which led Ken Schwaber to create scrum.org.

In this letter, Martin emphasizes the need for good technical practices and Extreme Programming as a relevant starting point.

In Eric’s article, you’ll find the link to Fowler’s FlaccidScrum article, where Fowler talks about the problems faced by projects which adopted Scrum without technical practices:

There's a mess I've heard about with quite a few projects recently. It works out like this:

They want to use an agile process, and pick Scrum

They adopt the Scrum practices, and maybe even the principles

After a while progress is slow because the code base is a mess

He also writes about the difference between Scrum & XP:

Scrum is process that's centered on project management techniques and deliberately omits any technical practices, in contrast to (for example) Extreme Programming.

Scrum is just XP without the technical practices that make it work.

The Why: Sustainable Product Delivery

As Simon Sinek says: “Start with Why”. Well, here, I’m going to add a “twist“, and I’ll end with the why.

In this article, I used a deductive approach, starting with the first principle in the Agile Manifesto:

Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

Everything derived afterward served to demonstrate a subset of the minimal criteria needed to achieve “continuous delivery”.

Ok, great, derivation based on principles, but so what? Why? And what happens if we don’t achieve continuous delivery? In the absence of adequate technical practices:

over time, quality will degrade and delivery will slow down - leading to product failure on the market.

That’s the really scary part. Continuous Delivery is essential. Without practicing Continuous Delivery, early development speed is just an illusion; it cannot be sustained in the long run. The reason is that quality drops, which causes a slowdown in delivery until, one day, it comes to a halt. That’s when we reach product failure.

Appendix: Why did I write this revised article?

When I wrote my first post about this, I simply stated “You can’t be agile without CI/CD and TDD“, but I didn’t explain how or why. This was one of the reasons why I wrote this revised article.

I saw in the comments that there was disagreement regarding the interpretation of the first principle within the Agile Manifesto, for example: is CI/CD written in the manifesto? I now want to go back to some comments which triggered me to write the revised article.

Maarten Dalmijn and Willem-Jan Ageling are prominent writers on LinkedIn regarding the topic of Scrum; I highly recommend following their work.

Now, we do have a difference in perspective when it comes to the necessity (or non-necessity) of technical practices in agility, so below I’m quoting some extracts of the discussion from the original post.

The comment I received from Maarten Dalmijn:

Yes, you can be Agile without CI/CD and TDD.

You can also be extremely not Agile with CI/CD and TDD.

CI/CD and TDD can enable an Agile way of working, but it doesn't lead to an Agile way of working.

Followed by this subcomment:

Early and continuous delivery of value is not the same as CI/CD, as otherwise it would be phrased as such. You could release a new version once per week manually.

Maybe that isn't early and continuous enough for you, but slightly deviating from a single principle doesn't immediately mean it's not Agile. That's too harsh. There are far worse offenses.

I also believe the main point is about value, and producing a product increment and putting it into PRD doesn't necessarily mean you are delivering value either.

"Deliver working software frequently, from a couple of weeks to a couple of months, with a preference to the shorter timescale.

It shows Agile Manifesto is from a different time. Releasing every second week was fast back then. CI/CD didn't exist in those days.

The comments above are one of the reasons why I decided to write this follow-up article:

Yes, you can be Agile without CI/CD and TDD.

In this revised article, we saw how CI/CD is traceable back to the first principle of the Agile Manifesto, which uses the term “continuous delivery“; satisfying “continuous delivery“ implies “continuous integration“; these are known as CI/CD practices.

We saw that “continuous integration” necessitates test automation, and that the most effective path to a reliable test automation suite is through TDD, though an alternative is TLD with mutation testing (a less effective route to a test automation suite). So, since test automation is required, we need either TDD or TLD. TDD is the more effective route to a robust test suite.

You can also be extremely not Agile with CI/CD and TDD.

CI/CD and TDD can enable an Agile way of working, but it doesn't lead to an Agile way of working.

I agree with this statement; see the section “Necessary versus Sufficient”. We can practice CI/CD and TDD, but if we’re not maximizing business value, or if we’re not gathering feedback from users (or are just ignoring it), then we’re not agile. CI/CD and TDD are pieces of the jigsaw puzzle. I explained in this article why we need them, but I’m not saying they’re enough. They’re not.

Early and continuous delivery of value is not the same as CI/CD, as otherwise it would be phrased as such.

Regarding terminology, the term “continuous delivery” is equivalent to CD. “Continuous delivery” implies “continuous integration”, abbreviated as CI. Thus, the first principle could equally have been written with “continuous delivery”, “CD”, or “CI/CD”. Fowler was one of the co-authors of the Agile Manifesto and, amongst other resources, I’ve referenced his article regarding the meaning of “continuous delivery”.

You could release a new version once per week manually.

We could also do regression testing manually. So, both testing and releases could be done manually or could be done in an automated way. Manual releases are not sustainable and also don’t conform to the definitions. Continuous Integration, by definition, necessitates test automation. Continuous Delivery, by definition, necessitates automation via a deployment pipeline.

Maybe that isn't early and continuous enough for you

We need to distinguish Continuous Delivery from Continuous Deployment. The Agile Manifesto ONLY necessitates Continuous Delivery (our software is in such a state that we COULD release it to production at any time); it does not necessitate Continuous Deployment (we have chosen to RELEASE the software to production at every commit).

Since Continuous Deployment is NOT required, it’s fine whether we release once a week, or every two weeks, or every month, etc.
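To make the distinction concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the names `Commit`, `is_releasable`, `run_pipeline` and the `deploy_on_every_commit` flag are hypothetical and do not come from any real CI/CD tool. The point it shows is that the automated test gate is the same in both cases; the only difference is whether passing the gate triggers a release automatically.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Commit:
    """Hypothetical commit: an identifier plus the automated checks run against it."""
    sha: str
    tests: List[Callable[[], bool]]


def is_releasable(commit: Commit) -> bool:
    # Continuous Integration gate: every automated test must pass.
    return all(test() for test in commit.tests)


def run_pipeline(commit: Commit, deploy_on_every_commit: bool) -> str:
    """Return the outcome of one (illustrative) pipeline run."""
    if not is_releasable(commit):
        return "rejected"
    if deploy_on_every_commit:
        # Continuous Deployment: a passing commit goes straight to production.
        return "deployed"
    # Continuous Delivery: the commit COULD be released at any time,
    # but the release itself remains a separate decision.
    return "releasable"


good = Commit("abc123", [lambda: True])
bad = Commit("def456", [lambda: True, lambda: False])

print(run_pipeline(good, deploy_on_every_commit=False))
print(run_pipeline(good, deploy_on_every_commit=True))
print(run_pipeline(bad, deploy_on_every_commit=True))
```

A team that releases weekly corresponds to `deploy_on_every_commit=False`: every passing commit is a release candidate, and the weekly release simply picks one of them.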

but slightly deviating from a single principle doesn't immediately mean it's not Agile. That's too harsh. There are far worse offenses.

Prior to listing the principles, the Agile Manifesto states “We follow these principles:”. Now the word “follow” is imperative. The first principle starts with “Our highest priority is” (“Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.”). So this first principle is indeed the highest priority principle.

OK, let’s say we don’t follow principle #1. What about the rest? By failing to satisfy principle #1, the development team:

Cannot satisfy principle #2 (welcoming changing requirements) because, without test automation (a requirement of CI), change is risky and unwelcome.

Cannot satisfy principle #3 over the long run. In the short term, principle #3 might be satisfiable, but later it cannot be satisfied if we don’t have test automation and release automation (take a look at a product company’s manual QA regression testing cycle).

Cannot satisfy principle #7 because without test automation (a requirement for CI) we actually don’t know if our software works

Cannot satisfy principle #8 because without test automation (a requirement for CI) the development pace is not sustainable, and feature delivery will slow down due to the accumulation of bugs and manual testing

Cannot satisfy principle #9 because without test automation, we do not have the protection of a regression test suite; thus we cannot refactor or improve the design, and we cannot attain technical excellence

Has problems satisfying principle #10 (maximizing the amount of work not done), because developers are wasting time on manual debugging, manual testing, and manual releases

Principle #12, reflecting on how to become more effective and change behavior, necessitates that at least the foundational wasteful activities (manual debugging, manual regression testing, manual releases) have been resolved before we go on further

I also believe the main point is about value, and producing a product increment and putting it into PRD doesn't necessarily mean you are delivering value either.

I agree that the main point is about value. Indeed, “value” is mentioned in the first principle.

"Deliver working software frequently, from a couple of weeks to a couple of months, with a preference to the shorter timescale."

It shows Agile Manifesto is from a different time. Releasing every second week was fast back then. CI/CD didn't exist in those days.

Releasing to production every second week is fine. I wrote separate sections to distinguish between Continuous Delivery versus Continuous Deployment. The first principle only necessitates that the software can be released to production, but not that we’re actually releasing to production.

Regarding the statement “CI/CD didn't exist in those days.”, let’s see if CI/CD existed back “in those days” (the days of the Agile Manifesto, 2001):

Continuous Integration existed a decade before the Agile Manifesto. The term Continuous Integration (CI) was coined by Grady Booch in 1991. Extreme Programming started in 1996.

Extreme Programming included practices of Continuous Integration (see pages in XP: Integrate Often and Dedicated Integration Computer).

Extreme Programming also included Continuous Delivery practices (see Make frequent small releases). On that page, we see the emphasis on frequent releases. Safely achieving this frequency (the page mentions some companies even doing daily releases back then!) can be done only with deployment automation.

Extreme Programming recommended one-week iterations (see Iterative Development). From a technical perspective, such frequency is not sustainably achievable without test automation and deployment automation - necessitating CI/CD practices.

Furthermore, Jez Humble mentions his experiences in Continuous Delivery, starting in 2000 at a startup, followed years later by work at ThoughtWorks. Regarding their Continuous Delivery book, the article states:

In the course of fixing that and a number of similar projects, Jez Humble and his co-worker Dave Farley distilled the ideas and experiences of colleagues involved in those projects into the Continuous Delivery book.

The tools back in those days were indeed much more primitive compared to the tools that we have today. Build automation and deployment automation tools started as home-grown solutions before emerging as widely available open source tools (read some history here, including Fowler’s role in promoting CI and the subsequent development of open source tools).

To summarize, the concepts behind CI/CD are traceable back to Extreme Programming. The essential practices and concepts already existed; the tools were primitive back then and improved over time, and the surge of open source tools for deployment pipelines accelerated adoption. The Continuous Delivery book, published a decade after the Agile Manifesto, collects a decade of its authors’ experience in applying CD across companies.
