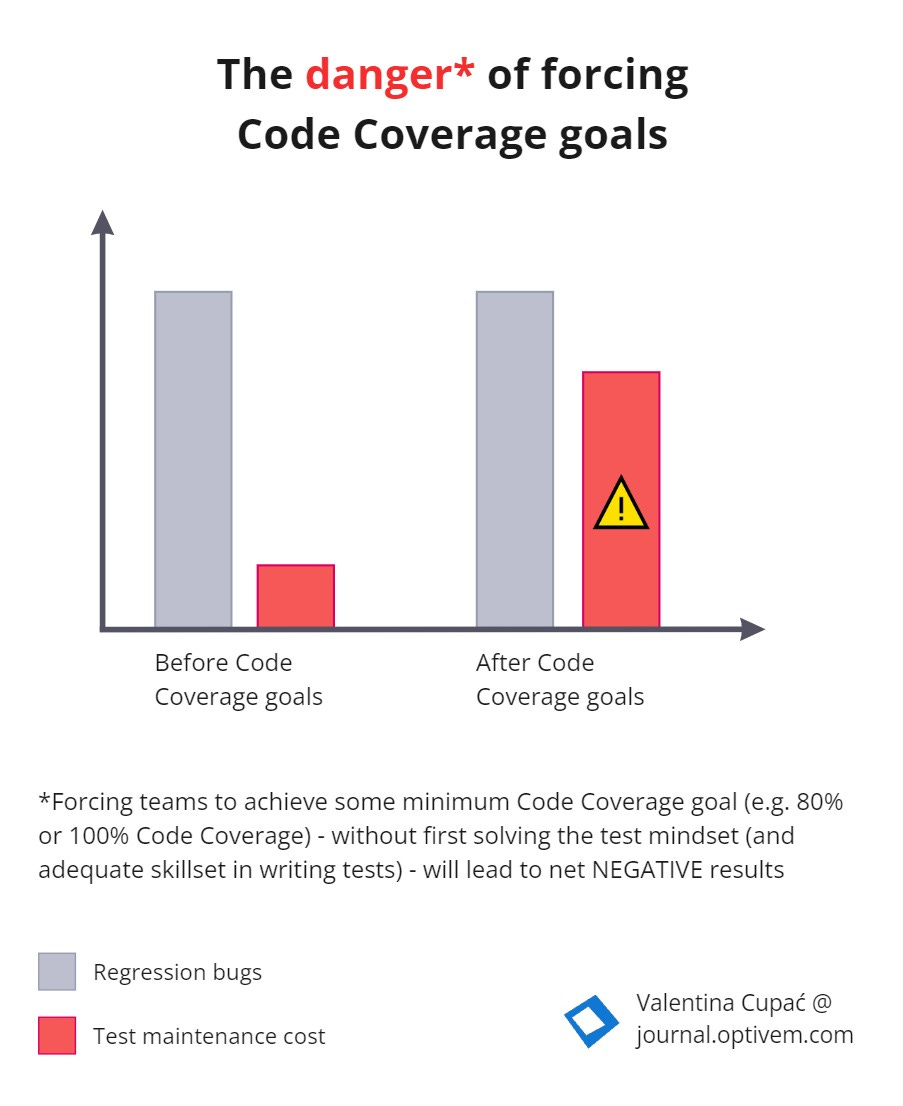

Don't chase Code Coverage goals

A common mistake engineering managers make is imposing an arbitrary minimum code coverage target (e.g., at least 80% coverage) on teams that lack the necessary testing skills, and the consequences are bad!

Robert was an engineering manager with a problem he couldn’t solve: the number of software bugs kept rising. It was getting out of control.

The QA Engineers had trouble keeping up with manual regression testing. The only automated tests were some end-to-end (e2e) UI tests written by the QA Engineers, and those were fragile and slow.

The developers didn’t write any tests anymore.

Well, once upon a time, they did try to write some tests, but it went badly. Writing the tests took a lot of time, the tests kept breaking, and they weren't readable either. It was such a hassle that they gave up, concluding that “tests don’t work around here.”

The developers weren’t writing any tests

Robert faced the following problems:

Robert told the development team to write tests, but they resisted, saying they didn’t have the time (nor the skills) to write them

Robert wanted an overview of how much code was tested; he was worried that he had no insight into the quality of the test suite

The QA Engineers couldn’t keep up with all the manual regression testing and complained to Robert that the developers weren’t testing their changes properly, but the developers said they didn’t have time for testing and that regression testing was solely QA's responsibility! Hm, who’s at fault?

Code Coverage comes to the rescue!

One day, Robert went to a conference, where he found THE answer to all his problems: enforcing Code Coverage metrics! He heard that code coverage measures how much of the code is executed by the tests.

So achieving 100% code coverage should be the goal, right? Doesn't 100% code coverage mean we have a high-quality test suite?

Robert thought this was a “quick and easy” way to “measure” the test suite's quality. By measuring code coverage, he could gain transparency into whether the team was writing enough tests: were the tests covering the code?

Mandating a minimum of 80% Code Coverage

Robert returned and announced the new standard to the team: 100% code coverage was the target for the next quarter, and anything below 80% would count against them in their performance appraisals.

So the team was given a code coverage goal, but they didn’t have the skills to write tests and weren’t given any support to acquire them. They also didn’t understand what Code Coverage meant beyond being a formal target. This is what they did:

They ran the Code Coverage tool

They read the Code Coverage report, which showed the percentage of classes, methods, lines, and branches covered

For every class and method scoring below 100%, they wrote a test class with test methods that executed the corresponding production methods, mocking out all of their dependencies

Eventually, they reached 100% Code Coverage across the board, because the tests executed every line and every branch

The illusion of success

They were happy with themselves - target achieved!

Even though writing tests was a chore (and felt purposeless), at least they hit the target mandated by management.

Robert looked at the Code Coverage report: 100%.

But it was just an illusion of success.

The reality became even WORSE

The reality was that the team didn’t move forward; they moved backward.

The situation was WORSE than before.

The bug count remained just as high as before. But why? Wasn’t 100% Code Coverage supposed to mean a higher-quality test suite, one that would catch regression bugs?

Their delivery speed went down: the tests were tightly coupled to the code's internal structure (the only way they knew how to write tests), so changing a single method broke several tests

The team resisted writing tests even more: it felt like a waste of time, a mere formality to reach an arbitrary score with no real benefits, and now they were even more demotivated
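The following minimal Python sketch, using the standard library's `unittest.mock`, illustrates what a test “tightly coupled to structure” can look like. All names (`Checkout`, `RefactoredCheckout`, `FlatTaxService`, `tax_for`, `gross_for`) are hypothetical. The structure-coupled test mocks the dependency and verifies which method was called, so a refactoring that keeps the observable result identical still breaks it, whereas a test that only checks the result keeps passing.

```python
from unittest.mock import Mock


class FlatTaxService:
    """Hypothetical real dependency: 20% flat tax, amounts in cents."""

    def tax_for(self, net: int) -> int:
        return net * 20 // 100

    def gross_for(self, net: int) -> int:
        return net + self.tax_for(net)


class Checkout:
    def __init__(self, tax_service):
        self.tax_service = tax_service

    def total(self, net: int) -> int:
        return net + self.tax_service.tax_for(net)


class RefactoredCheckout:
    """Same observable behavior as Checkout, different internal call."""

    def __init__(self, tax_service):
        self.tax_service = tax_service

    def total(self, net: int) -> int:
        return self.tax_service.gross_for(net)


def structure_coupled_test(checkout_cls) -> None:
    # Mocks the dependency and verifies *how* total() works internally.
    tax_service = Mock()
    tax_service.tax_for.return_value = 200
    checkout_cls(tax_service).total(1000)
    tax_service.tax_for.assert_called_once_with(1000)


def behavior_test(checkout_cls) -> None:
    # Checks only *what* total() returns, using the real dependency.
    assert checkout_cls(FlatTaxService()).total(1000) == 1200


def passes(test, checkout_cls) -> bool:
    # Runs a test function and reports whether it passed.
    try:
        test(checkout_cls)
        return True
    except AssertionError:
        return False


print(passes(structure_coupled_test, Checkout))            # True
print(passes(structure_coupled_test, RefactoredCheckout))  # False
print(passes(behavior_test, Checkout))                     # True
print(passes(behavior_test, RefactoredCheckout))           # True
```

Multiply the failing case by every test that mocks and verifies internal calls, and a single refactoring can break many tests without any change in behavior.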

But why didn’t Code Coverage solve the problem?

The short story above is based on experiences shared by Vladimir Khorikov (see Unit Testing Principles, Practices, and Patterns, 1.3.3 Problems with coverage metrics) as well as my own experiences, and it has resonated with many other people. Indeed, if you google “how to cheat Code Coverage”, you’ll find plenty of similar stories. There is nothing new or unique about this situation; in fact, the problem is well known!

Why wasn’t the test suite able to catch regression bugs even though code coverage was 100%? Why did delivery slow down, and why were these new tests so expensive to maintain? And how was Robert going to fix the drop in team morale?

Robert had introduced the code coverage minimum with the best of intentions, but now he had three new problems to solve.

He had tried to solve a problem… but made it even WORSE?!

But why? What’s the root cause?

If Code Coverage isn’t the solution, then what is? Find out in our follow-up article: https://journal.optivem.com/p/code-coverage-vs-mutation-testing