TDD in Legacy Code Iceberg

Many companies try to introduce TDD through a 2-5 day TDD Training Program. But the foundational skillsets in Effective Tests and Effective Code are missing, so TDD then doesn't work! How do we solve this?

"TDD does NOT work!"

Once upon a time, a team was working on a legacy project. The code was messy - it was a big ball of mud. There were some automated tests, but no one really trusted them - instead, manual regression testing was the only thing they could rely on. As the product grew and more developers joined, this became an even BIGGER problem.

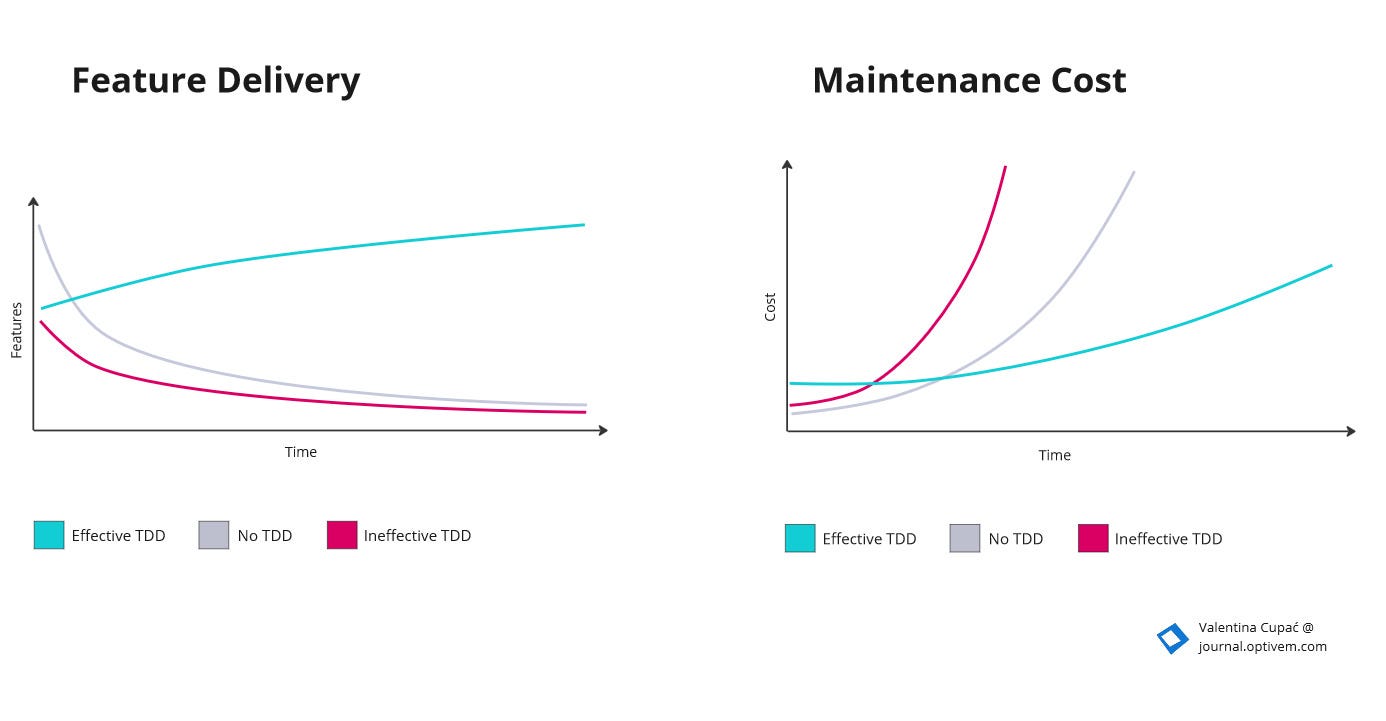

The team was WASTING a lot of time fixing bugs, and there was not much time left for features - they spent 80% of the sprint fixing bugs (both new bugs and regression bugs) and maybe 20% on features. The Product Owner started complaining about why delivery was so slow and why there were so many bugs.

A manager heard that TDD was the solution to the problems above.

So the manager sent the whole team on a 2-5 day TDD Training (and mandated it for all the other teams too). At the training, the teams watched the trainer apply TDD to a simple demo project. It all looked great on the demo project.

But on the real project, it was challenging - the real project was much more complex than the demo project, AND it was a legacy app rather than a greenfield project like the one illustrated during training.

So the team concluded: "TDD does not work!"

So how can we actually introduce TDD successfully? We have to solve foundational problems first:

Testable Architecture

Effective Tests

Effective Code

They are the foundational skillsets that are prerequisites for TDD. In legacy applications, they could be practiced within the TEST LAST approach.

Only after the team has effective skillsets in those foundational practices can we say that they practice effective TDD. In TDD, we use those same skillsets, just in a different sequence (tests before code) and incrementally (test then code, following RED-GREEN-REFACTOR, rather than a batch of code followed by a batch of tests).

Problems in Legacy Projects

How do we escape the “vicious cycle” of legacy code? We have no tests (or only poor tests). Any change is risky and needs manual testing. We’re rushing to meet delivery deadlines - producing code, but with no time for tests.

Legacy codebases are expensive to maintain because:

Unit Tests are missing. Consequently, developers have to debug the code to verify that it works correctly, which is a manual, time-consuming process.

Unit Tests are ineffective. The tests are not protecting against regression bugs and are hard to read, so developers have to spend a lot of time maintaining tests.

Source Code is unmaintainable. The source code is a “big ball of mud” with low modularity and high cyclomatic complexity, so developers have to spend a lot of time reading and understanding existing code in order to make changes.

The team tries a 2-5 day TDD Training - and fails!

At some point, company leadership decides to introduce Unit Tests (and TDD). They hire trainers to deliver a 2-5 day Unit Testing & TDD training for all the developers.

Leadership expects that, after this, delivery will be faster and the bug count will decrease.

But that does NOT happen!

Actually, the opposite happens - slower delivery and more bugs. It’s a disaster! The developers also lose motivation and say “TDD doesn’t work”.

During this webinar, we’ll discuss how to actually introduce TDD successfully. The transformation requires moving towards a testable architecture, writing effective tests and refactoring code, all within a test-last approach. Once these foundations are in place, we can start adopting TDD.

TDD Transformation

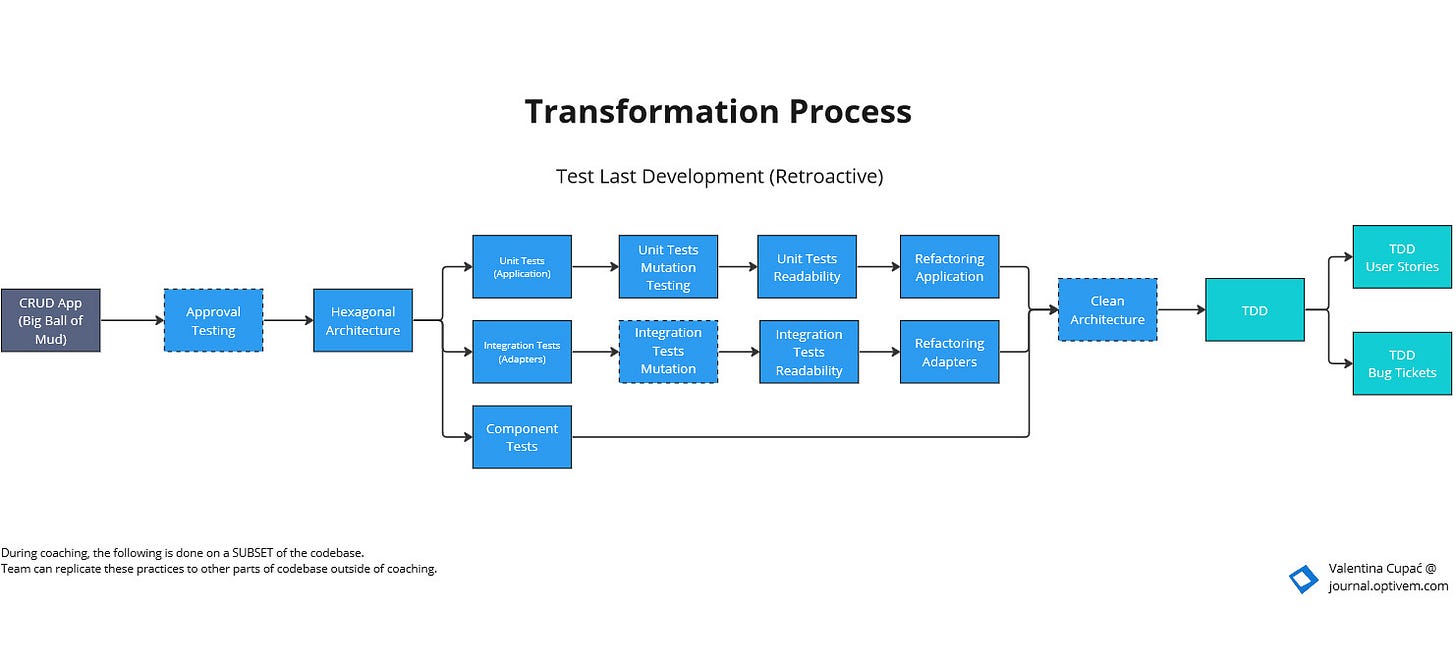

The following reflects a skeleton approach used by Valentina during Technical Coaching:

Stage 1: Effective Test Last Development (TLD)

During coaching, the following needs to be done on a subset of the existing codebase and test suite. This can then be replicated across the codebase.

Testable Architecture

We cannot write unit tests at all if the architecture itself is not testable. We need boundaries between business logic and infrastructure, which at minimum calls for Hexagonal Architecture (Ports and Adapters).
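To make that boundary concrete, here is a minimal Python sketch of a hexagon with one driven port. The `Order`, `OrderRepository`, `PlaceOrderService` and `InMemoryOrderRepository` names are hypothetical, chosen only for illustration: the application depends on the port (a `typing.Protocol`), so a unit test can plug in an in-memory adapter instead of a real database.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


@dataclass(frozen=True)
class Order:
    order_id: str
    amount: float


class OrderRepository(Protocol):
    """Driven port: the application depends on this abstraction, not on a database."""

    def save(self, order: Order) -> None: ...

    def find(self, order_id: str) -> Optional[Order]: ...


class PlaceOrderService:
    """Application (hexagon): business rules only, no I/O imports."""

    def __init__(self, repository: OrderRepository) -> None:
        self._repository = repository

    def place(self, order_id: str, amount: float) -> Order:
        if amount <= 0:
            raise ValueError("amount must be positive")
        order = Order(order_id, amount)
        self._repository.save(order)
        return order


class InMemoryOrderRepository:
    """Test adapter implementing the port; a production adapter would use a database."""

    def __init__(self) -> None:
        self._orders: Dict[str, Order] = {}

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def find(self, order_id: str) -> Optional[Order]:
        return self._orders.get(order_id)


def test_placed_order_is_stored() -> None:
    # Unit test of the hexagon: no database, no network.
    repository = InMemoryOrderRepository()
    PlaceOrderService(repository).place("A-1", 25.0)
    assert repository.find("A-1") == Order("A-1", 25.0)
```

In production, a database-backed adapter would implement the same port, so the business rule stays unit-testable regardless of the infrastructure behind it.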

Effective Tests

Unit Testing - We need Unit Tests targeting the Application (Hexagon). The team may or may not have Unit Tests yet: some teams have none, while others may have unit tests with high Code Coverage. But are those Unit Tests protecting us against regression bugs? We can run Mutation Testing and increase Mutation Coverage to 100% on a subset of the codebase, so that we can safely refactor that subset. Even 100% Mutation Coverage is not enough on its own, though: are the tests readable as specifications? This requires writing tests that express behavior rather than implementation details.
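The gap between code coverage and mutation coverage can be shown by hand. In this hypothetical Python example, `mutant_boundary` plays the role of a mutant that a tool such as PIT (Java), Stryker or mutmut would generate automatically by changing `>=` to `>`. Both tests execute every line of the production function, but only the one that specifies the boundary behavior kills the mutant.

```python
from typing import Callable

Impl = Callable[[int], bool]


def is_eligible_for_free_shipping(total_cents: int) -> bool:
    """Production code (hypothetical rule): free shipping from 50.00 upwards."""
    return total_cents >= 5_000


def mutant_boundary(total_cents: int) -> bool:
    """A mutant of the function above: '>=' changed to '>'."""
    return total_cents > 5_000


def weak_test(impl: Impl) -> bool:
    """Executes every line (full line coverage) but never checks the boundary."""
    return impl(10_000) is True and impl(1_000) is False


def strong_test(impl: Impl) -> bool:
    """Also specifies the boundary: exactly 50.00 qualifies for free shipping."""
    return weak_test(impl) and impl(5_000) is True


def kills_mutant(test: Callable[[Impl], bool]) -> bool:
    """A mutant is killed when the test passes on the original and fails on the mutant."""
    return test(is_eligible_for_free_shipping) and not test(mutant_boundary)
```

Here `weak_test` gives full line coverage yet lets the mutant survive, while `strong_test` kills it and also reads as a specification of the boundary rule.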

Integration Testing - Unit testing is not enough. We need Integration Testing at the boundaries: integration testing the Driver Adapters (REST API) and the Driven Adapters (Database Integration, Third Party System Integration).
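An integration test for a driven adapter runs real infrastructure code rather than a test double. As a sketch, assuming a hypothetical `SqliteOrderRepository` adapter, the test below exercises real SQL against an in-memory SQLite database from Python's standard `sqlite3` module. A production adapter would more likely target the team's actual database engine, for example by starting it in a container with Testcontainers.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    order_id: str
    amount: float


class SqliteOrderRepository:
    """Driven adapter (hypothetical): implements the repository port with real SQL."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._connection = connection
        self._connection.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(order_id TEXT PRIMARY KEY, amount REAL NOT NULL)"
        )

    def save(self, order: Order) -> None:
        with self._connection:  # commits on success, rolls back on error
            self._connection.execute(
                "INSERT OR REPLACE INTO orders (order_id, amount) VALUES (?, ?)",
                (order.order_id, order.amount),
            )

    def find(self, order_id: str) -> Optional[Order]:
        row = self._connection.execute(
            "SELECT order_id, amount FROM orders WHERE order_id = ?", (order_id,)
        ).fetchone()
        return Order(row[0], row[1]) if row else None


def test_saved_order_can_be_found_again() -> None:
    # Integration test: real SQL statements against a real (in-memory) database.
    repository = SqliteOrderRepository(sqlite3.connect(":memory:"))
    repository.save(Order("A-1", 25.0))
    assert repository.find("A-1") == Order("A-1", 25.0)
    assert repository.find("missing") is None
```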

Component Testing - We can test the whole microservice (connectivity), in isolation from external microservices. This gives us assurance that everything is wired up correctly.
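A component test starts the whole service and talks to it through its real entry point, while external microservices are replaced. In this minimal Python sketch (all names are hypothetical, and the 10% discount rule is invented for illustration), the service is wired up behind a real HTTP server from the standard library on a free port, and the external catalog microservice is replaced by a stub at its port. A fuller component test would typically run the packaged service and stub external services over HTTP, for example with WireMock.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Dict, Protocol


class CatalogClient(Protocol):
    """Driven port to the external catalog microservice."""

    def base_price(self, sku: str) -> float: ...


class PriceService:
    """Application logic (illustrative rule): 10% off the catalog price."""

    def __init__(self, catalog: CatalogClient) -> None:
        self._catalog = catalog

    def price_for(self, sku: str) -> float:
        return round(self._catalog.base_price(sku) * 0.9, 2)


def create_server(service: PriceService, port: int = 0) -> ThreadingHTTPServer:
    """Driver adapter plus wiring: GET /prices/<sku> returns JSON. Port 0 picks a free port."""

    class PriceHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            sku = self.path.rsplit("/", 1)[-1]
            body = json.dumps({"sku": sku, "price": service.price_for(sku)}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format: str, *args: object) -> None:
            pass  # silence per-request logging during tests

    return ThreadingHTTPServer(("127.0.0.1", port), PriceHandler)


class StubCatalog:
    """Stub replacing the external catalog microservice."""

    def __init__(self, prices: Dict[str, float]) -> None:
        self._prices = prices

    def base_price(self, sku: str) -> float:
        return self._prices[sku]


def test_price_endpoint_returns_discounted_price() -> None:
    server = create_server(PriceService(StubCatalog({"SKU-1": 20.0})))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_address[1]}/prices/SKU-1"
        # Bypass any proxy configured in the environment; we call localhost directly.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url, timeout=5) as response:
            assert response.status == 200
            assert json.loads(response.read()) == {"sku": "SKU-1", "price": 18.0}
    finally:
        server.shutdown()
        server.server_close()
```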

Effective Code

Clean Code - We need to be aware of what Clean Code looks like.

Code Smells - We need to be able to recognize Code Smells. This can be done via static code analysis tools and via human review.

Refactoring - Before refactoring code, we need to be sure that our tests protect us against regression bugs, so that the refactoring is safe. Parts of the codebase covered with a 100% mutation score are therefore safe to refactor: wherever we identify Code Smells there, we can refactor towards Clean Code. We practice incremental refactoring on existing code, relying on the tests to ensure that refactoring doesn’t break behavior.
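A small, hypothetical before/after illustrates this. `shipping_cost_before` has nested conditionals and magic numbers (two common Code Smells); `shipping_cost` is the same behavior after introducing a guard clause and named constants. The behavior test pins both sides of the threshold and the error case, so running it against both versions shows that the refactoring preserved behavior. Amounts are integer cents to keep comparisons exact.

```python
from typing import Callable

FREE_SHIPPING_THRESHOLD_CENTS = 5_000
STANDARD_SHIPPING_CENTS = 499


def shipping_cost_before(total_cents: int, is_member: bool) -> int:
    # Before: nested conditionals and magic numbers.
    if total_cents > 0:
        if is_member:
            return 0
        else:
            if total_cents >= 5000:
                return 0
            else:
                return 499
    else:
        raise ValueError("empty order")


def shipping_cost(total_cents: int, is_member: bool) -> int:
    """After: guard clause plus named constants; behavior unchanged."""
    if total_cents <= 0:
        raise ValueError("empty order")
    if is_member or total_cents >= FREE_SHIPPING_THRESHOLD_CENTS:
        return 0
    return STANDARD_SHIPPING_CENTS


def test_shipping_rules(cost: Callable[[int, bool], int] = shipping_cost) -> None:
    # Specification-style checks, including both sides of the threshold.
    assert cost(4_999, False) == STANDARD_SHIPPING_CENTS
    assert cost(5_000, False) == 0
    assert cost(1, True) == 0
    try:
        cost(0, False)
    except ValueError:
        pass
    else:
        raise AssertionError("empty orders must be rejected")
```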

Stage 2: Effective Test Driven Development (TDD)

In the stage above, we gained practice in the following steps:

Writing Effective Tests

Refactoring to Clean Code

Previously we used TLD, whereby code is written first and tests are written later, generally in a non-incremental way (i.e. write lots of code, then a batch of tests). Now it’s time to reverse the sequence (writing tests before code), to work incrementally (test, code, test, code), and to include refactoring (test, code, refactor - RED, GREEN, REFACTOR).
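Here is what two short RED-GREEN-REFACTOR cycles might look like, as a Python sketch using the standard `unittest` module. The discount rule (10% off from 100.00, amounts in integer cents) is a hypothetical example; the comments record the order in which each piece was written, while the code shows the state after the final REFACTOR step.

```python
import unittest


class ApplyDiscountTest(unittest.TestCase):
    # Cycle 1, RED: written first; it failed because apply_discount did not exist yet.
    # Cycle 1, GREEN: `return total_cents` was enough to make it pass.
    def test_orders_below_threshold_pay_full_price(self) -> None:
        self.assertEqual(apply_discount(9_999), 9_999)

    # Cycle 2, RED: a new behavior, failing against the Cycle 1 implementation.
    # Cycle 2, GREEN: the threshold branch in apply_discount was added.
    def test_orders_from_threshold_get_ten_percent_off(self) -> None:
        self.assertEqual(apply_discount(10_000), 9_000)


# REFACTOR (tests still green): magic numbers extracted into named constants.
DISCOUNT_THRESHOLD_CENTS = 10_000
DISCOUNT_PERCENT = 10


def apply_discount(total_cents: int) -> int:
    """Return the order total after applying the discount rule."""
    if total_cents < DISCOUNT_THRESHOLD_CENTS:
        return total_cents
    return total_cents - total_cents * DISCOUNT_PERCENT // 100
```

The tests can be run with `python -m unittest`; each cycle is a behavioral change (RED, GREEN) followed by a purely structural one (REFACTOR).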

We practice alternating between behavioral and structural changes.

By practicing TDD on real User Stories and Bug tickets, we can see how to effectively apply it in real day-to-day work.

Summary

To make the transformation above, the Stage 1 skillsets can be practiced on a subset of the existing codebase, and this could be done incrementally in a sliced approach. Stage 2 would be practiced on actual User Stories and Bug tickets within ongoing sprints.

YouTube Webinar

You can watch the whole recording here:

Epilogue

Thanks to J. B. Rainsberger’s comments, I updated some of the wording above, specifically my usage of the word “could”.

The essence is as follows:

The WHAT

Effective TDD is dependent on Foundations (Testable Architecture, Effective Tests and Effective Code). This means that if we’re missing any of those foundations, we are NOT practicing effective TDD.

The HOW

Gaining skillsets in those Foundations (Testable Architecture, Effective Tests, and Effective Code) could be achieved either with Test Last or with TDD.

For Greenfield Apps:

We could start with TDD directly. Through TDD, we’d learn about testability (a side effect of writing unit tests TDD-style, since it’s impossible to write untestable code that way), about Effective Tests (TDD naturally results in both a high Mutation Score and tests that are readable as executable requirement specs) and about Effective Code (we practice getting to clean code as part of refactoring).

For Brownfield (Legacy) Apps:

Preparation: The following is a “bare minimum” that must be done before applying TDD to the codebase (or a functional segment of it). First of all, we need to meet the condition of testability (Testable Architecture), because otherwise we can’t write unit tests. Legacy apps are often untestable, but before we make them testable (introducing abstractions over I/O, etc.) we first need some form of Approval Tests as protection. Then, once we achieve testability, the other prerequisite before applying TDD is a high Mutation Coverage on the existing codebase (or on the functional segment of the codebase where we’ll be applying TDD). Having a high Mutation Coverage means that we have regression bug protection (which is one aspect of Effective Tests). After the Preparation is done, we have two pathways:

Pathway 1: Practice the skillsets of Effective Tests and Effective Code on the existing codebase. Through this, the team can feel the pains of the existing tests and the existing codebase. Since this practice is done retroactively, rather than on current User Stories and Bug tickets, there is no impact on delivery, so the team can go at its own pace. Once the team is comfortable, they can start to practice TDD.

Pathway 2: Don’t practice Effective Tests and Effective Code on the existing codebase; instead, jump straight into TDD. In the RED step, when we’re modifying existing tests or writing new tests, we can practice writing tests effectively. In the REFACTOR step, we can practice refactoring to Effective Code.

The difference in impact:

Pathway 1 - Impact: The team will more strongly feel the pain of Test Last, because they now have to retroactively improve “bad” tests (e.g. tests that are unreadable or coupled to implementation details), which can pave the way towards TDD later as a way to avoid this pain. Furthermore, there is no (or minimal) impact on existing delivery, because the team is practicing these skillsets retroactively on existing code rather than on new User Stories; even if gaining the practice takes longer, timeboxing means it doesn’t affect existing delivery. When the team is comfortable with these practices (and becomes fast and proficient), they move on to TDD.

Pathway 2 - Impact: Jumping in directly makes the skillsets more “natural” to grasp. For example, writing readable tests coupled to behavior tends to be easier with TDD (compared to TLD). However, the impact on delivery comes much earlier. These skillsets are no longer timeboxed; they do affect existing delivery, because we would be applying TDD directly to new User Stories. The time taken to deliver the new User Stories includes both the time to apply TDD AND the time to ensure high Mutation Coverage (killing mutants) on the functionally affected codebase.
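For the brownfield Preparation step, an Approval Test (also called a golden master or characterization test) pins down current behavior before we restructure untestable code. Libraries such as ApprovalTests automate this; the Python sketch below is a hand-rolled, hypothetical stand-in rather than that library's API. It records the outputs of a hypothetical `legacy_invoice_line` function across many input combinations and compares later runs against the stored snapshot. Unlike real approval-testing tools, which write a "received" file for a human to review and approve, this sketch simply treats the first run as approved.

```python
import json
from pathlib import Path
from typing import Callable, List


def legacy_invoice_line(quantity: int, unit_price_cents: int, country: str) -> str:
    """Hypothetical untested legacy function whose current behavior we want to pin down."""
    total = quantity * unit_price_cents
    if country == "DE":
        total = total * 119 // 100
    elif quantity > 10:
        total -= total // 20
    return f"{quantity} x {unit_price_cents} ({country}) = {total}"


def collect_outputs(func: Callable[[int, int, str], str]) -> List[str]:
    # Exercise many input combinations to capture the current behavior, bugs included.
    return [
        func(quantity, price, country)
        for quantity in (0, 1, 11)
        for price in (0, 99, 1000)
        for country in ("DE", "FR")
    ]


def verify(received: List[str], approved_file: Path) -> bool:
    """First run records the snapshot as approved; later runs must match it exactly."""
    if not approved_file.exists():
        approved_file.write_text(json.dumps(received, indent=2))
        return True
    return json.loads(approved_file.read_text()) == received
```

Once the snapshot is in place, the team can introduce abstractions over I/O; any accidental behavior change then shows up as a snapshot mismatch.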

The essence is in the WHAT, and the HOW shows the different pathways to achieving it. Thus, I do agree with the conclusion from J. B. Rainsberger:

"You won't transition to TDD as daily practice until you've developed these other skills/conditions, and you don't _have to_ struggle with TDD in order to do that."

Exactly: we can’t practice TDD effectively without the foundational skillsets. The foundational skillsets could be practiced either within the existing Test Last approach or directly via TDD.