ATDD: 90% test automation with AI

“We spent so much time on maintenance when using Selenium, and we spend nearly zero time with maintenance using testRigor.” - Keith Powe, VP of Engineering, IDT

👋 Hello, this is Valentina with the free edition of the Optivem Journal. I help Engineering Leaders & Senior Software Developers apply TDD in Legacy Code.

This post is sponsored by testRigor, the #1 Generative AI-based Test Automation Tool.

The problem with Manual QA Testing

Many teams are still stuck with manual regression testing. QA Engineers document test procedures in Word, Confluence, etc., and spend most of their time executing tests. This is repetitive, slow, and error-prone.

For example, a two-week manual QA cycle often repeats: QA finds regression bugs → developers fix the regression bugs → QA retests → QA discovers new regression bugs. This can continue indefinitely. Consequently, these problems occur:

Slow Delivery - Manual regression testing cycles take too long, which delays the entire delivery process. Business is frustrated, blaming IT for being too slow.

Production Bugs - Due to release deadlines, timeboxed testing means some tests are skipped, letting regression bugs reach production.

Compliance Risks - In highly regulated industries (e.g., medical/healthcare), manual screenshot documentation slows down QA and risks human error and non-compliance.

Salary Costs - As the software grows, the number of regression bugs increases. Companies have to hire more QA engineers just to keep up, but it’s never enough.

Why Test Automation is too expensive

Attempt 1: E2E Tests with Selenium (or Playwright, Cypress, etc.)

Companies may buy record-and-play tools that generate Selenium E2E tests, or their QA Automation Engineers write Selenium tests by hand.

But these tests are fragile since they’re tightly coupled to implementation details. Small UI changes (e.g., changing a button’s CSS) can break many tests, forcing QA Automation Engineers to spend a lot of time fixing up those failing tests, leaving little time to test new functionality. For example, a company may have automated 30% of tests in the first year, 35% of tests in the second year, but then stalled because maintenance costs were too high.
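To make the coupling concrete, here is a minimal Python sketch. It deliberately does not use Selenium: the `Element` class, the two lookup helpers, and the CSS class names are hypothetical stand-ins for a page and its locators. It only illustrates why a lookup tied to styling breaks after a cosmetic change, while a lookup tied to what the user sees keeps working.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Element:
    """Hypothetical stand-in for a rendered UI element."""
    tag: str
    css_class: str
    label: str


def find_by_css_class(page: List[Element], css_class: str) -> Optional[Element]:
    """Implementation-coupled lookup: depends on a styling detail."""
    return next((e for e in page if e.css_class == css_class), None)


def find_by_label(page: List[Element], label: str) -> Optional[Element]:
    """Behavior-oriented lookup: depends on the text the user sees."""
    return next((e for e in page if e.label == label), None)


# Version 1 of the page, and version 2 after a purely cosmetic restyle.
page_v1 = [Element("button", "btn-primary", "Add to cart")]
page_v2 = [Element("button", "btn-cta-large", "Add to cart")]

for name, page in (("v1", page_v1), ("v2", page_v2)):
    by_css = find_by_css_class(page, "btn-primary") is not None
    by_label = find_by_label(page, "Add to cart") is not None
    print(f"{name}: found by CSS class={by_css}, found by label={by_label}")
```

In this toy model the restyle breaks only the CSS-based lookup. In a real suite the same selector is typically repeated across many tests, which is where the maintenance cost comes from.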

Attempt 2: E2E Tests with AI Tools

Writing and maintaining Selenium Tests was too expensive, so teams turned to AI tools that looked promising - you could write the tests in plain English, instead of Selenium. Manual QA Engineers could automate tests without QA Automation Engineers.

But those AI tools may lack abstraction, i.e., be coupled to UI implementation details (XPath or CSS Selectors), so UI changes break the tests.

Even worse, when the tool can’t find a UI element, it falls back to using AI. But AI can hallucinate—generate incorrect or misleading test results that require human intervention to fix—which can be more expensive than manual testing. For example, AI can give the wrong coordinates of an element.

Why Test Automation doesn’t solve high rework cost

Traditional test automation happens after code is written. If QA discovers developers misinterpreted specs, it’s too late. QA Automation Engineers end up writing tests that follow the implementation, even though it’s wrong. When misinterpretations are caught, developers have to fix the code, and QA Automation Engineers have to play catch-up by updating the tests.

Meanwhile, the team is working on the next sprint, so the QA Automation Engineer is now both catching up on existing features and writing tests for new ones. This is not sustainable.

ATDD is the solution

Tests must be abstracted away from implementation details. We want to write tests in plain English — not tied to CSS selectors or XPath.

Tests should be written before coding starts. We want to ensure that the whole team (PO, Developers, QA Engineers) is aligned on the specifications before coding begins, to avoid costly rework later.

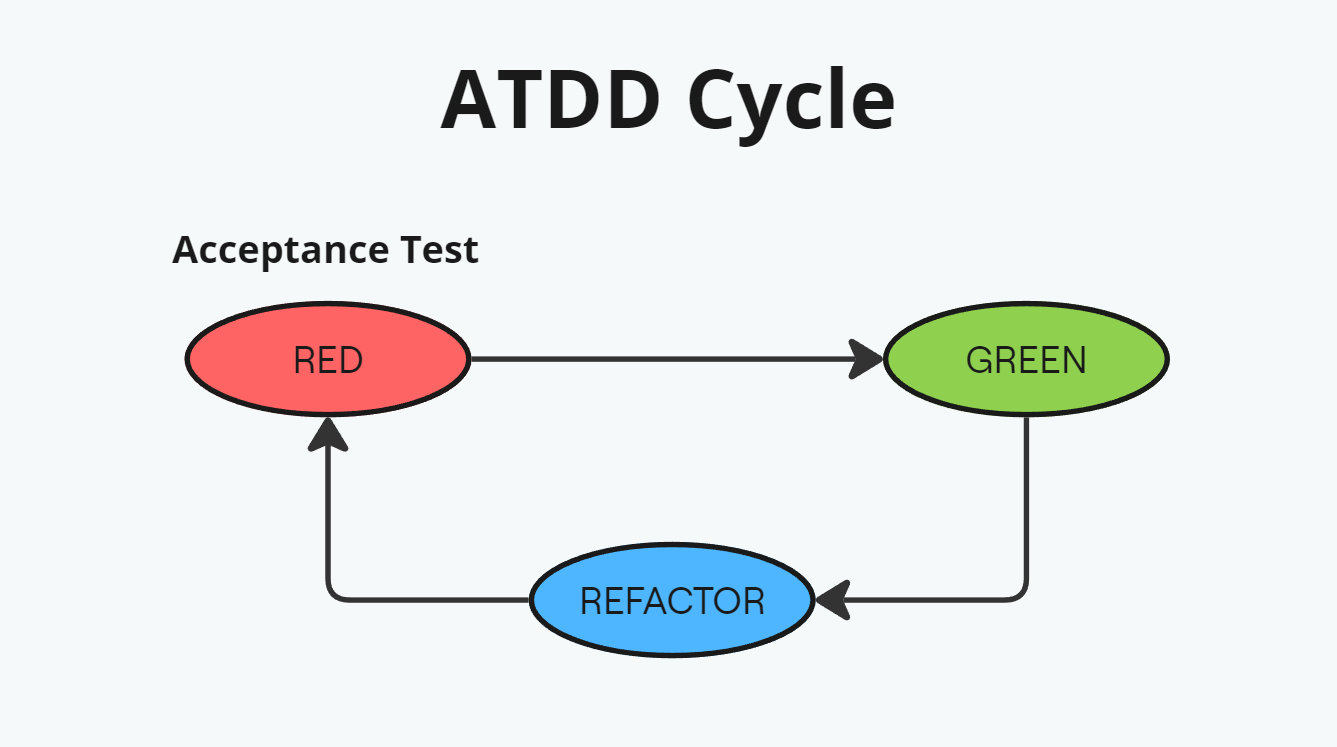

With ATDD, we start with a specification, convert it into an executable specification (acceptance test), and write code to make the tests pass. Thus, with ATDD, test automation is done before coding. You write executable specifications in plain English, so Product Owners can review and correct them before the developers start coding. This step alone can save 30–35% of engineering time due to precise requirements.
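As a rough illustration of that order of work, the Python sketch below writes the executable specification first and only then the code. The shipping rule (orders over 100 ship free, otherwise a flat fee of 5) and all function names are made up for the example; they are not taken from any real product.

```python
from typing import Callable


def spec_free_shipping_over_100(shipping_cost: Callable[[float], float]) -> None:
    """Executable specification, agreed on before any production code exists."""
    assert shipping_cost(150.0) == 0.0, "orders over 100 should ship free"
    assert shipping_cost(40.0) == 5.0, "other orders pay the flat fee"


def run_spec(shipping_cost: Callable[[float], float]) -> str:
    """Run the specification and report RED or GREEN."""
    try:
        spec_free_shipping_over_100(shipping_cost)
        return "GREEN"
    except (AssertionError, NotImplementedError):
        return "RED"


def shipping_cost_not_implemented(order_total: float) -> float:
    """State of the code when the specification is first written."""
    raise NotImplementedError("feature not built yet")


def shipping_cost(order_total: float) -> float:
    """Implementation written afterwards to satisfy the specification."""
    return 0.0 if order_total > 100.0 else 5.0


print(run_spec(shipping_cost_not_implemented))
print(run_spec(shipping_cost))
```

The specification function is the artifact a Product Owner would review. The implementation is judged only by whether it turns that specification green.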

ATDD with the Four Layer Model

The most well-known approach for ATDD uses Dave Farley’s Four Layer Model. Tests are written in a DSL that is decoupled from implementation, so the tests read as requirements. The DSL calls Drivers, e.g., Web UI Drivers (using Selenium with XPath/CSS), API Drivers (using an HTTP client), Mobile App Drivers, CLI Drivers, etc. Furthermore, by annotating tests with channels, we can write a test once and execute it against multiple channels.
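The layering can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Dave Farley's reference implementation: the in-memory `Shop`, both drivers, the `CHANNELS` mapping, and the selector string are all hypothetical, and the drivers call the shop directly where a real UI driver would use Selenium and a real API driver an HTTP client.

```python
from typing import Dict, List, Protocol


class Shop:
    """System under test (hypothetical in-memory shop)."""
    def __init__(self) -> None:
        self.cart: List[str] = []

    def add_item(self, name: str) -> None:
        self.cart.append(name)


class ShopDriver(Protocol):
    """Driver contract: how the DSL talks to one channel."""
    def add_to_cart(self, product: str) -> None: ...
    def cart_contents(self) -> List[str]: ...


class ApiDriver:
    """Stand-in for an HTTP-based driver."""
    def __init__(self, shop: Shop) -> None:
        self._shop = shop

    def add_to_cart(self, product: str) -> None:
        self._shop.add_item(product)

    def cart_contents(self) -> List[str]:
        return list(self._shop.cart)


class UiDriver:
    """Stand-in for a Selenium-based driver; selectors live only here."""
    ADD_BUTTON_SELECTOR = "#add-to-cart"  # hypothetical, never seen by tests

    def __init__(self, shop: Shop) -> None:
        self._shop = shop
        self.clicked: List[str] = []

    def add_to_cart(self, product: str) -> None:
        self.clicked.append(self.ADD_BUTTON_SELECTOR)  # simulated click
        self._shop.add_item(product)

    def cart_contents(self) -> List[str]:
        return list(self._shop.cart)


class ShopDsl:
    """DSL layer: the domain language used by test cases."""
    def __init__(self, driver: ShopDriver) -> None:
        self._driver = driver

    def add_product_to_cart(self, product: str) -> None:
        self._driver.add_to_cart(product)

    def assert_cart_contains(self, product: str) -> None:
        assert product in self._driver.cart_contents(), f"{product} not in cart"


def customer_can_add_product_to_cart(dsl: ShopDsl) -> None:
    """Test case layer: reads like a requirement, knows no selectors or URLs."""
    dsl.add_product_to_cart("Kindle")
    dsl.assert_cart_contains("Kindle")


CHANNELS = {"api": ApiDriver, "ui": UiDriver}


def run_on_all_channels() -> Dict[str, str]:
    """Execute the same test case once per channel."""
    results = {}
    for channel, driver_cls in CHANNELS.items():
        customer_can_add_product_to_cart(ShopDsl(driver_cls(Shop())))
        results[channel] = "passed"
    return results


print(run_on_all_channels())
```

If the button's selector changes, only `UiDriver.ADD_BUTTON_SELECTOR` needs editing; the test case and the DSL stay untouched.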

Designing this maintainable Acceptance Test Architecture requires a strong design skillset and time investment. Anyone can write these tests (e.g., QA Engineer), but Developers need to maintain the “plumbing” (DSL, Channels & Drivers) and fix test failures. In case of changes to the UI, Developers need to update Drivers without having to change tests; this helps reduce maintenance costs.

The reality is that in many teams, executives want results “right now”; they want to immediately convert their whole Manual QA Test Suite into automated testing. This can’t be done overnight with the Four Layer Model.

ATDD with testRigor

In testRigor, tests are written in plain English, decoupled from UI implementation details. Manual QA Engineers can write the tests themselves. There’s no “plumbing” code, which means that (1) Developers and QA Automation Engineers aren’t needed to write tests, (2) when developers change the UI, the tests don’t have to be changed.

When adopting ATDD with testRigor, QA Engineers write a failing test in plain English, and developers can write code (or let testRigor generate code) to make the test pass.

Note: testRigor usually detects UI elements from plain English steps. If it fails, you can add custom code, creating “plumbing” to maintain. The amount of maintenance needed depends on your product and how often custom detection is required.

Phase 1 - Migrating Manual Tests to testRigor

Manual QA Engineers can import their Manual Test Procedures into testRigor (which recognizes plain-English steps). We can connect testRigor to our test source (e.g., JIRA / TestRail, etc.), or we can create the tests ourselves in testRigor.

Note: If we do not have documented test cases, we can use testRigor to generate test cases by providing our application URL and a description of the application domain. Then testRigor uses AI to generate the test cases.

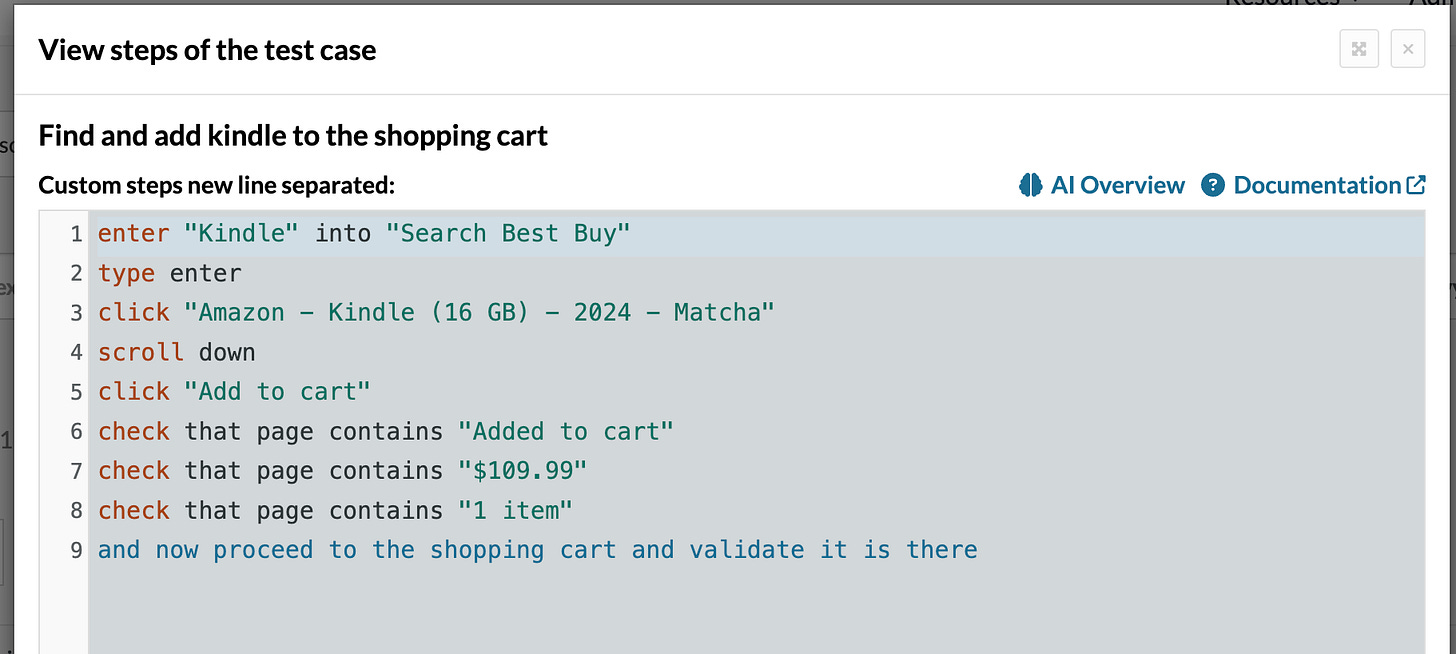

We can view and edit the imported tests, e.g., ‘Find and add Kindle to the shopping cart’:

Above, several steps were successfully recognized by testRigor, and are thus able to be executed deterministically (no AI involved).
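One way to picture deterministic recognition is pattern matching against a fixed set of step shapes. The Python sketch below is an assumption-laden illustration, not testRigor's actual parser or grammar: the three patterns and the example steps are hypothetical. A step that matches a pattern becomes a structured action; anything else is set aside for review.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical step patterns; a real tool would support a far richer grammar.
STEP_PATTERNS = {
    "click": re.compile(r'^click "(?P<target>[^"]+)"$', re.IGNORECASE),
    "enter": re.compile(
        r'^enter "(?P<text>[^"]+)" into "(?P<target>[^"]+)"$', re.IGNORECASE
    ),
    "check": re.compile(
        r'^check that page contains "(?P<text>[^"]+)"$', re.IGNORECASE
    ),
}


def recognize(step: str) -> Optional[Dict[str, str]]:
    """Return a structured action if the step matches a known pattern."""
    for action, pattern in STEP_PATTERNS.items():
        match = pattern.match(step.strip())
        if match:
            return {"action": action, **match.groupdict()}
    return None


def triage(steps: List[str]) -> Tuple[List[Dict[str, str]], List[str]]:
    """Split steps into recognized actions and sentences needing review."""
    recognized: List[Dict[str, str]] = []
    needs_review: List[str] = []
    for step in steps:
        parsed = recognize(step)
        if parsed is not None:
            recognized.append(parsed)
        else:
            needs_review.append(step)
    return recognized, needs_review


procedure = [
    'enter "Kindle" into "Search"',
    'click "Add to cart"',
    "find and add a Kindle to the shopping cart",
]
recognized, needs_review = triage(procedure)
print(len(recognized), "recognized; needs review:", needs_review)
```

Recognized steps give the same result on every run because no model is consulted, which is the point of keeping AI as a fallback only.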

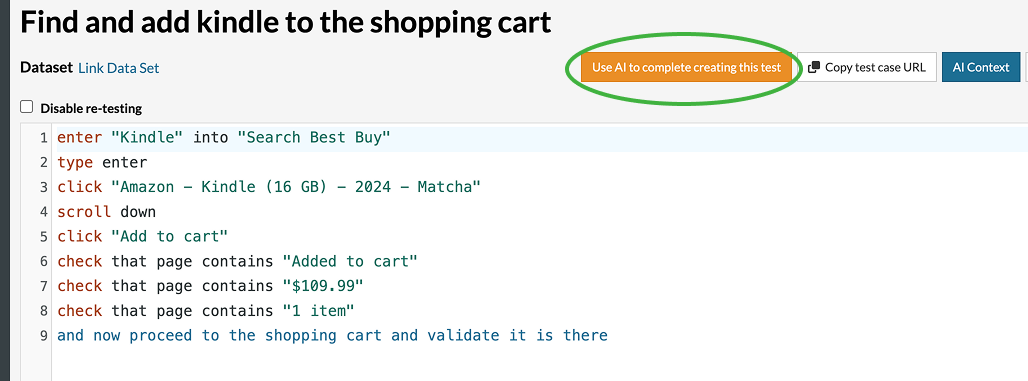

However, when a step is not recognized (e.g., the last one), we can fall back to AI by clicking “Use AI to complete creating this test”:

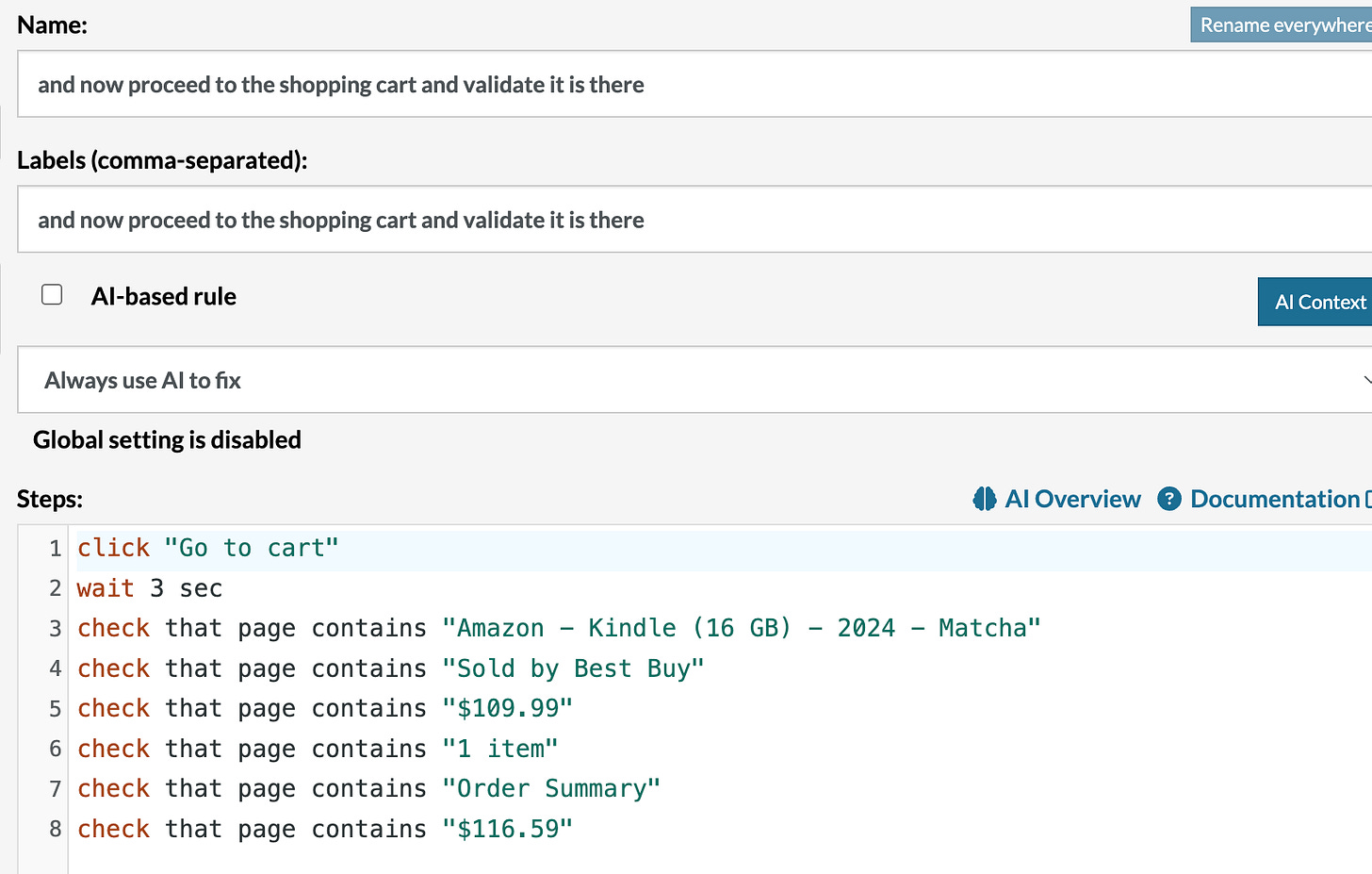

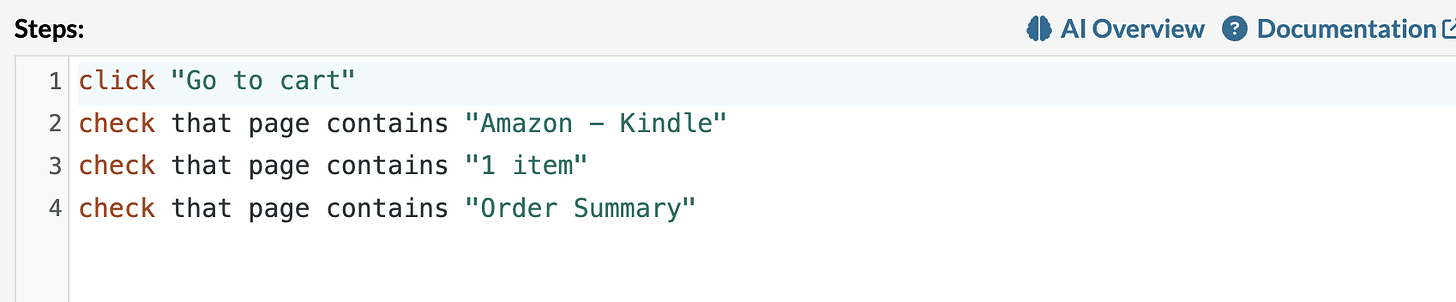

Then AI generates UI steps for the unrecognized custom step above:

The QA Engineer can review and correct the generated steps. When AI “hallucinates”, the QA Engineer can manually correct steps. After the QA Engineer’s cleanup, here’s the updated test:

The test passes!

Note: This new step becomes a reusable step, recognized when parsing further Manual QA test procedures, even if sentences aren’t identical.
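How testRigor matches non-identical sentences is not described here, so the following Python sketch only shows one simple way such matching could work: comparing sentences as sets of significant words. The stop-word list, the `ReusableSteps` class, and the sub-steps are hypothetical, and a real tool presumably uses something more robust.

```python
import re
from typing import Dict, FrozenSet, List, Optional

# Words ignored when comparing sentences (hypothetical list).
STOP_WORDS = {"a", "an", "the", "to", "and", "then", "please"}


def normalize(sentence: str) -> FrozenSet[str]:
    """Reduce a sentence to its set of significant lowercase words."""
    words = re.findall(r"[a-z0-9]+", sentence.lower())
    return frozenset(w for w in words if w not in STOP_WORDS)


class ReusableSteps:
    """Library of custom steps, keyed by normalized wording."""
    def __init__(self) -> None:
        self._steps: Dict[FrozenSet[str], List[str]] = {}

    def register(self, sentence: str, sub_steps: List[str]) -> None:
        self._steps[normalize(sentence)] = list(sub_steps)

    def lookup(self, sentence: str) -> Optional[List[str]]:
        return self._steps.get(normalize(sentence))


library = ReusableSteps()
library.register(
    "Find and add Kindle to the shopping cart",
    ['enter "Kindle" into "Search"', 'click "Add to cart"'],
)

# Different wording, same significant words: resolves to the stored step.
print(library.lookup("find Kindle, then add to shopping cart"))
# Different intent: no match, so it would need review.
print(library.lookup("Remove Kindle from the cart"))
```

The benefit compounds across batches: each cleaned-up custom step reduces the number of unrecognized sentences in the procedures imported later.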

To summarize, we import a batch of Manual Test Procedures, clean them up, and then repeat with the next batch. This means we can quickly automate a high percentage of Manual Tests.

Phase 2 - Applying ATDD with testRigor

RED - Write the failing test

For a user story, write testable acceptance criteria. Based on these, the QA Engineer writes a test in plain English and enters it into testRigor. As testRigor executes the test, the QA Engineer can edit custom steps. If AI hallucinates, the QA Engineer can correct and clean up the steps, then ask AI to continue until the test is complete.

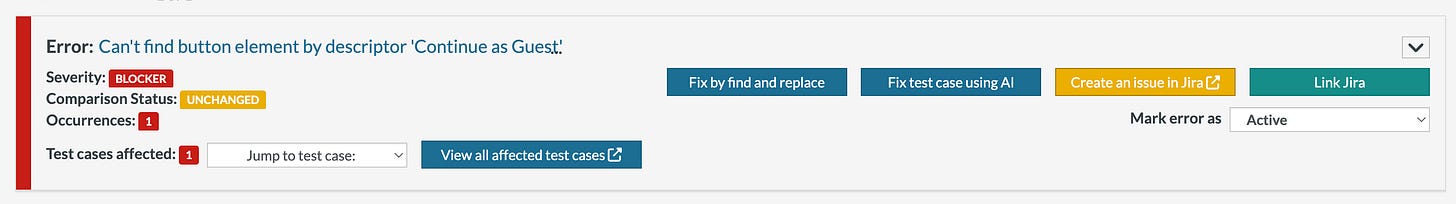

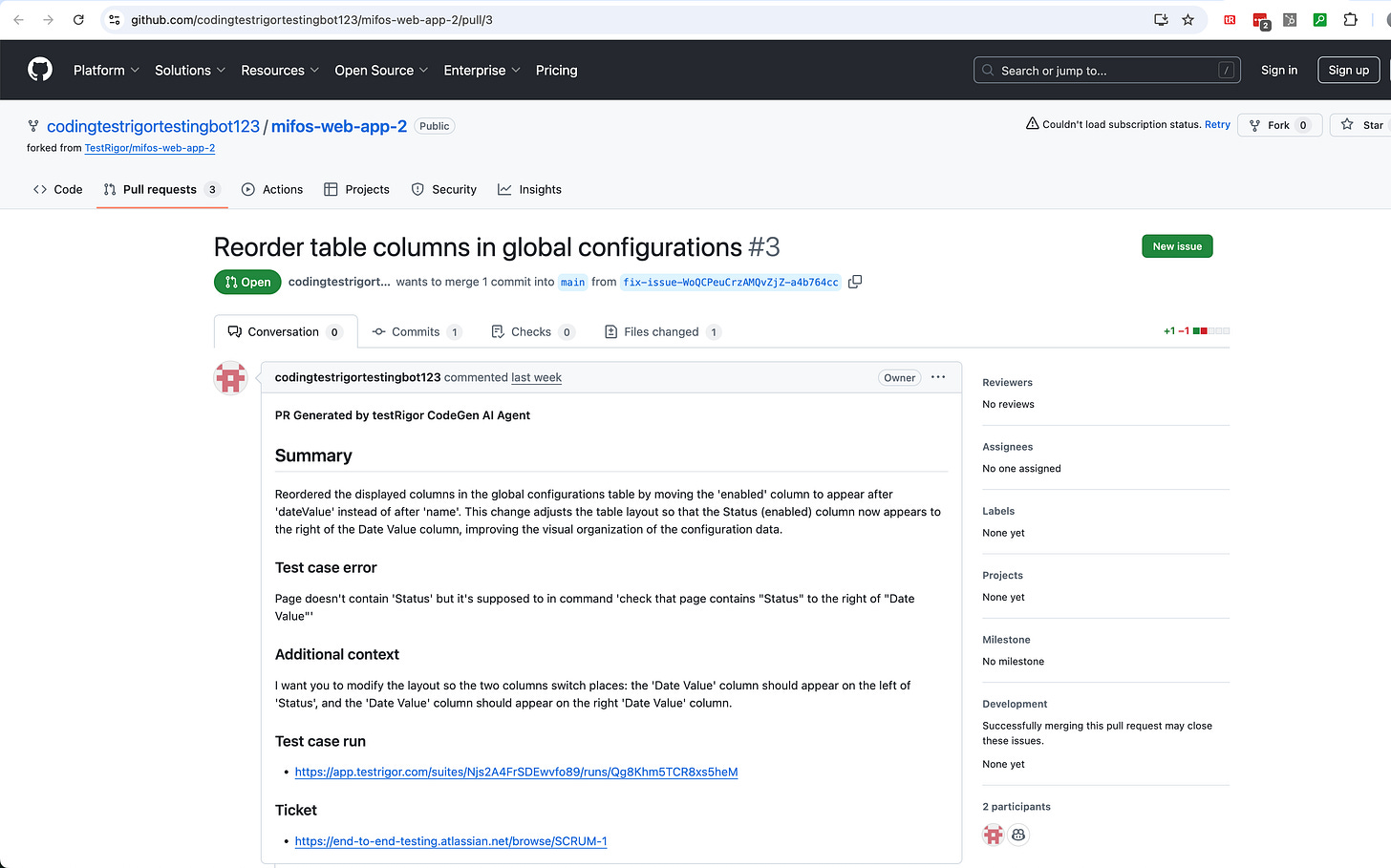

When testRigor runs the test, it should fail because the functionality isn’t implemented yet - causing testRigor to report an error:

Note: When writing Acceptance Tests, we need to stub out external systems. To support this, testRigor provides a way to mock API calls at the network level (see its documentation on mocking API calls).
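The idea of stubbing an external system can be shown in-process with a hand-written stub. The Python sketch below is not testRigor's API mocking feature, which works on network calls; the `PaymentGatewayStub`, `Checkout`, and the status strings are hypothetical names chosen for illustration.

```python
from typing import List


class PaymentGatewayStub:
    """Stub for a hypothetical external payment provider."""
    def __init__(self, approve: bool) -> None:
        self.approve = approve
        self.charges: List[int] = []  # records calls for later assertions

    def charge(self, amount_cents: int) -> bool:
        self.charges.append(amount_cents)
        return self.approve


class Checkout:
    """System under test; receives its gateway through the constructor."""
    def __init__(self, gateway: PaymentGatewayStub) -> None:
        self._gateway = gateway

    def place_order(self, amount_cents: int) -> str:
        approved = self._gateway.charge(amount_cents)
        return "CONFIRMED" if approved else "PAYMENT_DECLINED"


def order_is_confirmed_when_payment_is_approved() -> None:
    gateway = PaymentGatewayStub(approve=True)
    assert Checkout(gateway).place_order(1999) == "CONFIRMED"
    assert gateway.charges == [1999]


def order_is_rejected_when_payment_is_declined() -> None:
    gateway = PaymentGatewayStub(approve=False)
    assert Checkout(gateway).place_order(1999) == "PAYMENT_DECLINED"


order_is_confirmed_when_payment_is_approved()
order_is_rejected_when_payment_is_declined()
print("both acceptance scenarios passed against the stub")
```

Because the stub decides the provider's answer, both the approved and the declined scenario can be tested on demand without touching a real payment system.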

GREEN - Write the code to make the test pass

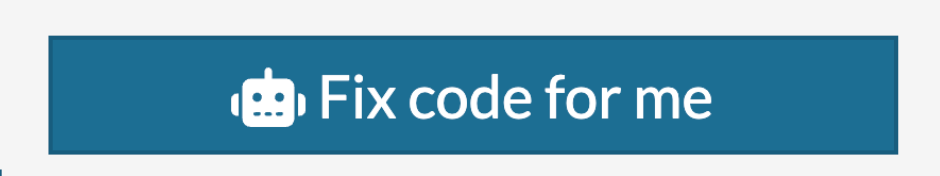

We can use testRigor to generate code to make the tests pass. For a failing test, click “Fix code for me” so that AI can generate the necessary code:

testRigor generates code and checks if the test passes. If the test still fails, testRigor retries until the test passes. Finally, the test passes:

REFACTOR - Refactor the code

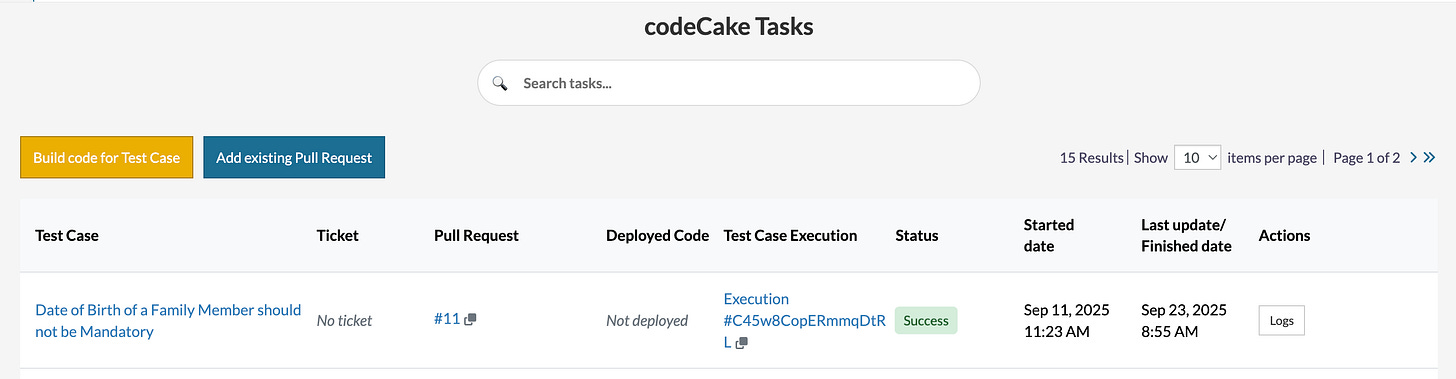

After the test passes, testRigor creates a PR for a developer to review the generated code. The developer may review and/or refactor the code.
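The GREEN and REFACTOR steps above amount to a generate, test, retry loop whose result is handed to a human reviewer. The Python sketch below illustrates that control flow only. It is not how testRigor is implemented: the canned candidates stand in for AI-generated code, and the `max_attempts` cap is an assumption added so the loop always terminates.

```python
from typing import Callable, Optional, Tuple

Candidate = Callable[[int, int], int]


def fix_until_green(
    generate: Callable[[Optional[str]], Candidate],
    passes: Callable[[Candidate], bool],
    max_attempts: int = 5,
) -> Tuple[int, Optional[Candidate]]:
    """Request candidates until one passes the test or attempts run out."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        candidate = generate(feedback)
        if passes(candidate):
            return attempt, candidate  # green: hand off for human review
        feedback = f"attempt {attempt} failed the acceptance test"
    return max_attempts, None  # still red: escalate to a developer


# Canned candidates standing in for AI-generated code (hypothetical).
_canned = iter([lambda a, b: a - b, lambda a, b: a * b, lambda a, b: a + b])


def fake_generator(feedback: Optional[str]) -> Candidate:
    """Return the next canned candidate, ignoring the feedback."""
    return next(_canned)


def acceptance_test(add: Candidate) -> bool:
    """The failing test that the generated code must satisfy."""
    return add(2, 3) == 5


attempts, winner = fix_until_green(fake_generator, acceptance_test)
status = "ready for code review" if winner else "needs a developer"
print(f"attempts={attempts}, status={status}")
```

A passing test only shows that the candidate satisfies the acceptance criteria that were written down, which is why the loop ends in a review step instead of an automatic merge.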

How can you overcome AI limitations with testRigor?

testRigor is fully AI-first, letting QA Engineers correct hallucinations in plain English and continue building tests. Its AI framework handles hallucinations automatically for single-step actions, enabling testing of charts, diagrams, AI features, and previously untestable apps like Flutter, Citrix, Oracle EBS, and games.

You don’t need to learn any prompting techniques - testRigor will do it for you. All you need is to be crystal clear on what exactly needs to be done. testRigor will do all the AI tricks for you and will correct the hallucinations in 99.95% of the cases for one-step scenarios.

What are the time savings with testRigor?

Development time is reduced:

testRigor enables ATDD, and because there is no reliance on implementation details, you can write tests before developers write code → using ATDD saves 30–35% of engineering time otherwise spent on rework, by closing requirement gaps

testRigor generates code until the test passes and creates a PR for developers to review, so they can work with it as they would with another developer, through code review

QA Time is reduced:

testRigor lets QA Engineers import manual tests from test management systems

testRigor lets Manual QA Engineers build test automation 4.5 times faster than professional QA Automation Engineers (see IDT Case Study)

QA time is reduced because testRigor generates test steps (based on the test description provided by the QA Engineer), and then the QA Engineer reviews the test

Test maintenance is almost eliminated since tests don’t rely on implementation details

Compliance risks are reduced:

testRigor is HIPAA compliant and, in particular, helps medical companies automate 21 CFR Part 11 compliance, enabling automation on all media, including Web, Native Mobile, Native Desktop, Mainframes, API, phone calls, text messages, 2FA, etc.

How did IDT achieve 90% automation with testRigor?

IDT achieved 90% automation in under a year using testRigor. You can watch the video “How IDT was able to achieve 90% automation in under a year with testRigor” and read the case study “How IDT Corporation went from 34% automation to 91% automation in 9 months”.

Try out testRigor

👉 Request a demo — and see how testRigor can help your team automate manual tests and start with ATDD.

Use the code “VALENTINA42” to get a 10% discount.

How can manual QA Engineers start with testRigor?

Thanks for publishing this, Valentina. I always learn a lot from your writing on ATDD and TDD. It's always valuable to see your perspective on these concepts.

I'm very much in favour of the Four Layer Model (which I originally learned from Dave Farley and have seen practical real-world examples from your talks/writings) and have seen its benefits in terms of decoupling and reduced maintenance.

Reading this piece about ATDD with testRigor, I am trying to understand the context:

1. From the outside, it seems that a lot of the plumbing lives inside testRigor's platform rather than in a Four Layer architecture that the team owns. Is that a fair way to look at it?

2. You mention that many executives want results 'right now' and that converting a whole manual test suite can't be done overnight with the Four Layer Model. In that context, do you see tools like testRigor mainly as a short-term, pragmatic option under delivery pressure, or as a long-term solution for teams that could or should invest in a Four Layer Model? Simply put, for which kinds of teams/products would you lean towards a tool like testRigor?

I am asking because you know better than anyone how much energy, effort, and investment it takes to scale these practices inside a team. Today it's getting harder to explain the value of these investments when AI can generate code and tests with relatively little effort.

Do you think it would help readers if this context (when you would reach for a tool like testRigor versus the Four Layer Model) were made more explicit?

I would love to hear how you frame these trade-offs when you work with teams.