🏆 TDD in Legacy Code - Pipeline (Summary)

Legacy Code often doesn't have a Pipeline. That's why we have built a Pipeline, so that we have the foundation for automated test execution to support TDD.

👋 Hello, this is Valentina. I help Engineering Leaders & Senior Software Developers apply TDD in Legacy Code. This article is part of the TDD in Legacy Code series.

Congratulations! You’ve now completed your Pipeline setup (TDD in Legacy Code series), which is an important prerequisite before we get started with TDD. Let’s recap what we’ve built up to now:

Why did we build a Pipeline before starting TDD?

Legacy Code often lacks a Pipeline; instead, the delivery process is manual, with manual releases and manual testing.

What would happen if a team attempted to start TDD on a Legacy Project - but without a Pipeline?

Problem 1. Inconsistent Component Level Test results. Component Level Tests include Unit Tests, Narrow Integration Tests, Component Tests & Contract Tests. If there is no Pipeline, a developer might write these tests, get them to pass locally (as part of the GREEN step in TDD), and commit the code, but when another developer pulls the source code, the tests fail on their machine! So the tests pass on one developer’s machine, yet fail on another’s. (Of course, there is a deeper issue with the tests themselves, but it’s now blocking the team because there was no Pipeline to detect the issue with the tests.)

Problem 2. Can’t effectively run System Level Tests. System Level Tests include Acceptance Tests, External System Contract Tests, Smoke Tests & E2E Tests. When there’s no Pipeline, to run any automated System Level Tests, you first need to manually deploy the System. This is time-consuming and error-prone, so we’re limited in how frequently we can run those tests. And even when we run them, if there’s an error, we don’t know whether it’s a deployment issue or something else.

We needed a Build Server so that tests can be verified independently of the developer’s local machine (solving Problem 1) and so that we can run slower tests (solving Problem 2). We needed a Pipeline to get feedback when any tests are red on the Build Server, even though they might be green on the Local Machine (solving Problem 1), and to automate deployment, which enables System Level Tests (solving Problem 2).
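To make Problem 1 concrete, here is a minimal Python sketch. Everything in it is hypothetical and only for illustration: the `report_path` function, the `check_report_path` check, and the three simulated machines. It assumes the deeper issue in the test is a path hard-coded from the author's own machine; real cases can have many other causes.

```python
def report_path(env):
    """Hypothetical production code: where the latest report is written."""
    return env["HOME"] + "/reports/latest.csv"


def check_report_path(env):
    """Hypothetical test with the author's own home directory baked in."""
    return report_path(env) == "/home/alice/reports/latest.csv"


# Simulated machines, reduced to the one environment variable that matters here.
MACHINES = {
    "Alice's laptop": {"HOME": "/home/alice"},
    "Bob's laptop": {"HOME": "/home/bob"},
    "Build Server": {"HOME": "/var/lib/build-agent"},
}

# Run the same check on every simulated machine.
RESULTS = {
    name: "GREEN" if check_report_path(env) else "RED"
    for name, env in MACHINES.items()
}

for name, colour in RESULTS.items():
    print(f"{name}: {colour}")
```

The check is green on Alice's laptop and red on Bob's laptop and on the Build Server. With a Pipeline, that red result appears on the Build Server, rather than being discovered later on Bob's machine.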

Thus, before introducing any tests (and TDD), we must ensure we have a Pipeline. A Pipeline is central to Continuous Delivery, enabling us to test our release candidates to ensure that we can safely and quickly release to production.

Pipeline Architecture to support TDD

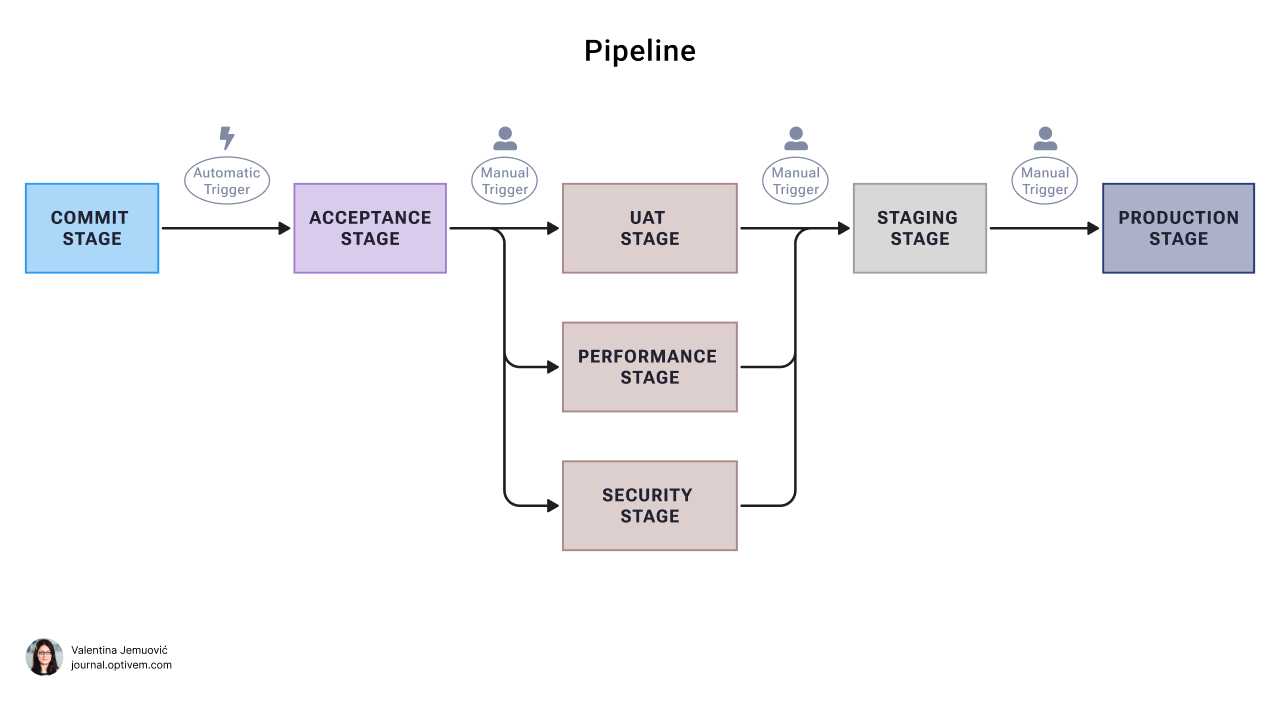

We set up the following Pipeline Architecture:

✅ Repositories

✅ Environments

✅ Pipeline

Let’s summarize what we’ve learnt up to now:

1. Repositories

Component Repositories

We’ve set up Component Repositories:

Frontend Repository

Microservice #1 Repository

Microservice #2 Repository

Microservice #3 Repository

In each Component Repository, we added test placeholders for Component Level Tests:

Unit Tests

Narrow Integration Tests

Component Tests

Contract Tests
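One way to picture a test placeholder is a single, trivially passing test per test type, which gives the Pipeline something to run before any real tests exist. The sketch below assumes Python's built-in `unittest`; the class names are hypothetical, and the placeholders in your own Component Repository will depend on your language and test framework.

```python
import unittest


class UnitTestPlaceholder(unittest.TestCase):
    def test_placeholder(self):
        # Trivially true; to be replaced by real Unit Tests.
        self.assertTrue(True)


class NarrowIntegrationTestPlaceholder(unittest.TestCase):
    def test_placeholder(self):
        # Trivially true; to be replaced by real Narrow Integration Tests.
        self.assertTrue(True)


class ComponentTestPlaceholder(unittest.TestCase):
    def test_placeholder(self):
        # Trivially true; to be replaced by real Component Tests.
        self.assertTrue(True)


class ContractTestPlaceholder(unittest.TestCase):
    def test_placeholder(self):
        # Trivially true; to be replaced by real Contract Tests.
        self.assertTrue(True)
```

Saved as a test module, these can be run with `python -m unittest`. In practice, each test type would usually live in its own folder or module so that it can be run separately.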

System Level Test Repository

Furthermore, we set up a repository for System Level testing:

System Test Repository

In the System Test Repository, we added test placeholders for System Level Tests:

Acceptance Tests

External System Contract Tests

Smoke Tests

E2E Tests

2. Environments

Automated Test Environments

Acceptance Environment - for executing Acceptance Tests

E2E Environment - for executing External System Contract Tests & E2E Tests

Performance Environment - for executing Performance Tests

Security Environment - for executing Security Tests

Note: We covered Acceptance Environment and E2E Environment, which are focused on automated functional testing. Furthermore, you can have environments for non-functional tests, e.g. Performance Environment and Security Environment.

Manual Test Environments

UAT Environment - for executing Manual QA Tests

Release Environments

Staging Environment

Production Environment

3. Pipeline

Pipeline Stages

The Pipeline has these Stages:

Commit Stage - running Component Level Tests (Unit Tests, Narrow Integration Tests, Component Tests, Contract Tests) and publishing Docker Images

Acceptance Stage - deploying Docker Images to Acceptance Environment and running Smoke Tests & Acceptance Tests; deploying Docker Images to E2E Environment and running Smoke Tests, External System Contract Tests & E2E Tests

UAT Stage - deploying Docker Images to UAT Environment, running Smoke Tests, so that then QA Engineers can do Manual QA Testing

Performance Stage - deploying Docker Images to Performance Environment, running Smoke Tests, running Performance Tests

Security Stage - deploying Docker Images to Security Environment, running Smoke Tests, running Security Tests

Staging Stage - deploying Docker Images to Staging Environment, running Smoke Tests

Production Stage - deploying Docker Images to Production Environment, running Smoke Tests

Note: We didn’t cover the Performance Stage, Security Stage, or Staging Stage; you can optionally add them if you need them.
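The stage order above can be sketched as a small Python model. This is an illustration, not the configuration of any real CI tool: `Stage`, `PIPELINE`, and `run_pipeline` are hypothetical names, the optional Performance, Security, and Staging Stages are left out, and how each stage is triggered is not modelled. The sketch assumes that a failing action stops its stage and that later stages are then skipped.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    actions: Tuple[str, ...]  # ordered actions performed by the stage


PIPELINE: List[Stage] = [
    Stage("Commit", ("run Component Level Tests", "publish Docker Images")),
    Stage("Acceptance", (
        "deploy to Acceptance Environment",
        "run Smoke Tests",
        "run Acceptance Tests",
        "deploy to E2E Environment",
        "run Smoke Tests",
        "run External System Contract Tests",
        "run E2E Tests",
    )),
    Stage("UAT", ("deploy to UAT Environment", "run Smoke Tests")),
    Stage("Production", ("deploy to Production Environment", "run Smoke Tests")),
]


def run_pipeline(
    stages: List[Stage],
    execute: Callable[[str, str], bool],
) -> Dict[str, str]:
    """Run stages in order; after the first failure, skip everything else."""
    results: Dict[str, str] = {}
    failed = False
    for stage in stages:
        if failed:
            results[stage.name] = "skipped"
            continue
        # all() short-circuits, so actions after a failing one are not executed.
        ok = all(execute(stage.name, action) for action in stage.actions)
        results[stage.name] = "passed" if ok else "failed"
        failed = not ok
    return results


# Example run in which every action succeeds.
print(run_pipeline(PIPELINE, lambda stage, action: True))
```

Here `execute` stands in for whatever actually performs an action (a deployment script, a test runner) and reports success or failure. If the Acceptance Tests fail, the release candidate never reaches the UAT or Production Stage.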

Pipeline Dashboard

Note: You can also add Performance Stage, Security Stage, Staging Stage between the UAT Stage and Production Stage.

What’s Next?

As part of the Pipeline phase, we’ve completed the Repositories, Environments, and Pipeline setup.

I know that Pipeline setup was not the most exciting part, but now we’re ready to get to the fun part! In our upcoming articles, we’ll be learning about:

Testable Architecture: How do we make Legacy Code architecture testable so that it can support testing?

Test Last Development: How do we effectively write tests retroactively in a Test-Last approach?

Test Driven Development: Finally, how do we switch to Test-Driven Development?

Is it possible to do TDD without a pipeline?

Hi Valentina, a question:

"UAT Stage - deploying Docker Images to UAT Environment, running Smoke Tests, so that then QA Engineers can do Manual QA Testing."

If I only run the UAT stage on a Docker image that has already passed the Acceptance stage, do we still need to run the smoke tests? The Acceptance stage already runs them in the Acceptance environment, so running them again in the UAT stage or other stages is like running the same tests twice. Can we skip that?